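Command-Line Deployment#

Prerequisites#

HA Cluster Dependency#

An HA HDFS cluster depends on a ZooKeeper cluster.

For ZooKeeper installation and deployment, see: ZooKeeper Installation.

The ZooKeeper service addresses are assumed to be zookeeper1:2181,zookeeper2:2181,zookeeper3:2181.

Kerberos Authentication Dependency (Optional)#

If Kerberos authentication is enabled, see Kerberos Installation for how to install and deploy Kerberos.

The Kerberos service address is assumed to be kdc1.

Ranger Authorization Dependency (Optional)#

If Ranger authorization is enabled, see Ranger Installation for how to install and deploy Ranger.

The Ranger service address is assumed to be ranger1.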

Configure the yum Repository and Install lava#

Log in to hdfs1, then switch to the root user:

ssh hdfs1

su - root

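Before you begin, configure the yum repository and install the lava command-line management tool:

# Fetch the repo file from the yum repository host (assumed to be 192.168.1.10)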

scp root@192.168.1.10:/etc/yum.repos.d/oushu.repo /etc/yum.repos.d/oushu.repo

# Append the yum repository host's entry to /etc/hosts

# Install the lava command-line management tool

yum clean all

yum makecache

yum install -y lava
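The /etc/hosts step above does not give a command. Below is a minimal, idempotent sketch. The hostname yumrepo used in the usage line is a hypothetical placeholder, so substitute the real name and IP of your repository host.

```shell
#!/usr/bin/env bash
# Append "IP HOSTNAME" to a hosts file only if that exact pairing is not already
# present, so re-running the setup does not create duplicate entries.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 when some line has the IP in field 1 and the name in a later field.
  if ! awk -v ip="$ip" -v n="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage: `add_host_entry 192.168.1.10 yumrepo` (yumrepo is a hypothetical hostname). Running it a second time leaves the file unchanged.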

Create an hdfshosts file:

touch ${HOME}/hdfshosts

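Fill hdfshosts with the hostnames of all HDFS machines. Here, hdfs1 to hdfs3 are assumed to run the HDFS services, and hdfs4 to hdfs6 are additional machines with only the HDFS client installed.

If you will not use HDFS on machines that run no HDFS service, you can leave out hdfs4 to hdfs6.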

hdfs1

hdfs2

hdfs3

hdfs4

hdfs5

hdfs6
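You can also write the host list in one step instead of editing the file by hand. The small helper below is a sketch. It writes one hostname per line and refuses to run with an empty list, so an existing file is never truncated by mistake.

```shell
#!/usr/bin/env bash
# Write the given hostnames to FILE, one per line (overwrites FILE).
write_hostfile() {
  local file="$1"; shift
  # Guard: with no hostnames, printf would emit a single blank line.
  [ "$#" -gt 0 ] || { echo "ERROR: no hostnames given" >&2; return 1; }
  printf '%s\n' "$@" > "$file"
}
```

Usage: `write_hostfile "${HOME}/hdfshosts" hdfs1 hdfs2 hdfs3 hdfs4 hdfs5 hdfs6`. Omit the last three names if you have no client-only machines.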

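On the first machine, exchange public keys with the other cluster nodes. This enables passwordless SSH login and configuration-file distribution:

# Exchange public keys with the other machines in the cluster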

lava ssh-exkeys -f ${HOME}/hdfshosts -p ********

# Distribute the repo file to the other machines in the cluster

lava scp -f ${HOME}/hdfshosts /etc/yum.repos.d/oushu.repo =:/etc/yum.repos.d

Installation#

Preparation#

Create an hdfshost file:

touch ${HOME}/hdfshost

Fill hdfshost with the hostnames of all machines that run HDFS services:

hdfs1

hdfs2

hdfs3

Create nnhostfile, which lists the HDFS NameNode nodes:

touch ${HOME}/nnhostfile

Fill nnhostfile with the hostnames of the HDFS NameNodes:

hdfs1

hdfs2

Create a jnhostfile file:

touch ${HOME}/jnhostfile

Fill it with the hostnames of the JournalNodes:

hdfs1

hdfs2

hdfs3

Create a dnhostfile file:

touch ${HOME}/dnhostfile

Fill dnhostfile with the hostnames of the HDFS DataNodes:

hdfs1

hdfs2

hdfs3
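Every role file must be a subset of hdfshost, and a JournalNode quorum should have an odd number of members (at least three). A quick consistency check before installing can catch typos. The sketch below makes one further assumption: the "exactly two NameNodes" rule reflects this guide's nn1/nn2 layout, not a general HDFS limit.

```shell
#!/usr/bin/env bash
# Usage: check_role_files HDFSHOST NNFILE JNFILE DNFILE
# Returns non-zero (and prints reasons to stderr) if the role files look inconsistent.
check_role_files() {
  local all="$1" nn="$2" jn="$3" dn="$4" f host rc=0
  for f in "$nn" "$jn" "$dn"; do
    # Every non-empty line of a role file must also appear verbatim in hdfshost.
    while read -r host || [ -n "$host" ]; do
      [ -z "$host" ] && continue
      if ! grep -qxF -- "$host" "$all"; then
        echo "ERROR: $host in $f is not listed in $all" >&2
        rc=1
      fi
    done < "$f"
  done
  local nn_count jn_count
  nn_count=$(grep -c . "$nn")
  jn_count=$(grep -c . "$jn")
  # This guide configures exactly two NameNodes (nn1, nn2).
  if [ "$nn_count" -ne 2 ]; then
    echo "ERROR: expected 2 NameNodes, found $nn_count" >&2
    rc=1
  fi
  # A JournalNode quorum should be odd-sized and have at least three members.
  if [ "$jn_count" -lt 3 ] || [ $((jn_count % 2)) -eq 0 ]; then
    echo "ERROR: JournalNode count $jn_count should be odd and >= 3" >&2
    rc=1
  fi
  return "$rc"
}
```

Usage: `check_role_files ${HOME}/hdfshost ${HOME}/nnhostfile ${HOME}/jnhostfile ${HOME}/dnhostfile && echo OK`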

Install HDFS:

lava ssh -f ${HOME}/hdfshost -e 'yum install -y hdfs'

Create the NameNode directory:

lava ssh -f ${HOME}/nnhostfile -e 'mkdir -p /data1/hdfs/namenode'

lava ssh -f ${HOME}/nnhostfile -e 'chmod -R 755 /data1/hdfs'

lava ssh -f ${HOME}/nnhostfile -e 'chown -R hdfs:hadoop /data1/hdfs'

Create the DataNode directories:

lava ssh -f ${HOME}/dnhostfile -e 'mkdir -p /data1/hdfs/datanode'

lava ssh -f ${HOME}/dnhostfile -e 'mkdir -p /data2/hdfs/datanode'

lava ssh -f ${HOME}/dnhostfile -e 'chmod -R 755 /data1/hdfs'

lava ssh -f ${HOME}/dnhostfile -e 'chmod -R 755 /data2/hdfs'

lava ssh -f ${HOME}/dnhostfile -e 'chown -R hdfs:hadoop /data1/hdfs'

lava ssh -f ${HOME}/dnhostfile -e 'chown -R hdfs:hadoop /data2/hdfs'

lava ssh -f ${HOME}/dnhostfile -e 'mkdir -p /var/lib/hadoop-hdfs/'

lava ssh -f ${HOME}/dnhostfile -e 'chmod -R 755 /var/lib/hadoop-hdfs/'

lava ssh -f ${HOME}/dnhostfile -e 'chown -R hdfs:hadoop /var/lib/hadoop-hdfs/'

Kerberos Preparation (Optional)#

If Kerberos is enabled, install the Kerberos client on all HDFS nodes:

lava ssh -f ${HOME}/hdfshost -e "yum install -y krb5-libs krb5-workstation"

From hdfs1, log in to kdc1:

ssh kdc1

mkdir -p /etc/security/keytabs

kadmin.local

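Once in the kadmin console, run the following commands. To keep the configuration easy to follow, each principal's primary name is simply the HDFS role name.

Note: hostnames in principals must be lowercase, regardless of their original case.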

addprinc -randkey namenode/hdfs2@OUSHU.COM

addprinc -randkey namenode/hdfs1@OUSHU.COM

addprinc -randkey datanode/hdfs1@OUSHU.COM

addprinc -randkey datanode/hdfs2@OUSHU.COM

addprinc -randkey datanode/hdfs3@OUSHU.COM

addprinc -randkey journalnode/hdfs1@OUSHU.COM

addprinc -randkey journalnode/hdfs2@OUSHU.COM

addprinc -randkey journalnode/hdfs3@OUSHU.COM

addprinc -randkey HTTP/hdfs1@OUSHU.COM

addprinc -randkey HTTP/hdfs2@OUSHU.COM

addprinc -randkey HTTP/hdfs3@OUSHU.COM

addprinc -randkey hdfs@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab hdfs@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab namenode/hdfs1@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab namenode/hdfs2@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab datanode/hdfs1@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab datanode/hdfs2@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab datanode/hdfs3@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab journalnode/hdfs1@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab journalnode/hdfs2@OUSHU.COM

ktadd -k /etc/security/keytabs/hdfs.keytab journalnode/hdfs3@OUSHU.COM

ktadd -norandkey -k /etc/security/keytabs/hdfs.keytab HTTP/hdfs1@OUSHU.COM

ktadd -norandkey -k /etc/security/keytabs/hdfs.keytab HTTP/hdfs2@OUSHU.COM

ktadd -norandkey -k /etc/security/keytabs/hdfs.keytab HTTP/hdfs3@OUSHU.COM
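The principal list above follows a fixed pattern (role/host@REALM, with a lowercase host). Because of that, you can generate it instead of typing it. The sketch below prints the same addprinc and ktadd commands for a given host layout. The realm and keytab path are the ones this guide uses. Feeding each line to `kadmin.local -q` is one way to apply them, and is left to you.

```shell
#!/usr/bin/env bash
# Print the kadmin commands used above for a given role/host layout.
REALM="OUSHU.COM"
KEYTAB="/etc/security/keytabs/hdfs.keytab"

# Args: space-separated host lists for NameNode, DataNode, JournalNode, HTTP.
gen_kadmin_cmds() {
  local nn_hosts="$1" dn_hosts="$2" jn_hosts="$3" http_hosts="$4"
  local host princ
  local -a princs=()
  # ${host,,} lowercases the hostname (bash 4+), as required for principals.
  for host in $nn_hosts;   do princs+=("namenode/${host,,}@${REALM}"); done
  for host in $dn_hosts;   do princs+=("datanode/${host,,}@${REALM}"); done
  for host in $jn_hosts;   do princs+=("journalnode/${host,,}@${REALM}"); done
  for host in $http_hosts; do princs+=("HTTP/${host,,}@${REALM}"); done
  for princ in "${princs[@]}" "hdfs@${REALM}"; do
    echo "addprinc -randkey ${princ}"
  done
  echo "ktadd -k ${KEYTAB} hdfs@${REALM}"
  for princ in "${princs[@]}"; do
    # HTTP principals are exported with -norandkey, mirroring the commands above.
    case "$princ" in
      HTTP/*) echo "ktadd -norandkey -k ${KEYTAB} ${princ}" ;;
      *)      echo "ktadd -k ${KEYTAB} ${princ}" ;;
    esac
  done
}
```

Usage on kdc1: `gen_kadmin_cmds "hdfs1 hdfs2" "hdfs1 hdfs2 hdfs3" "hdfs1 hdfs2 hdfs3" "hdfs1 hdfs2 hdfs3" | while read -r cmd; do kadmin.local -q "$cmd"; done`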

# All required principals have now been added and exported to the keytab

Distribute the generated keytab:

ssh hdfs1

lava ssh -f ${HOME}/hdfshost -e 'mkdir -p /etc/security/keytabs/'

scp root@kdc1:/etc/krb5.conf /etc/krb5.conf

scp root@kdc1:/etc/security/keytabs/hdfs.keytab /etc/security/keytabs/hdfs.keytab

lava scp -r -f ${HOME}/hdfshost /etc/krb5.conf =:/etc/krb5.conf

lava scp -r -f ${HOME}/hdfshost /etc/security/keytabs/hdfs.keytab =:/etc/security/keytabs/hdfs.keytab

lava ssh -f ${HOME}/hdfshost -e 'chown hdfs:hadoop /etc/security/keytabs/hdfs.keytab'

Configuration#

HA Configuration#

Set fs.defaultFS to the HA nameservice instead of a single NameNode address.

Edit core-site.xml:

vim /usr/local/oushu/conf/common/core-site.xml

Modify it as follows:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://oushu</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>zookeeper1:2181,zookeeper2:2181,zookeeper3:2181</value>

</property>

</configuration>

Next, edit hdfs-site.xml:

vim /usr/local/oushu/conf/common/hdfs-site.xml

Modify it as follows:

<configuration>

<property>

<name>dfs.domain.socket.path</name>

<value>/var/lib/hadoop-hdfs/dn_socket</value>

</property>

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>oushu</value>

</property>

<property>

<name>dfs.ha.namenodes.oushu</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.oushu.nn1</name>

<value>hdfs2:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.oushu.nn1</name>

<value>hdfs2:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.oushu.nn2</name>

<value>hdfs1:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.oushu.nn2</name>

<value>hdfs1:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hdfs1:8485;hdfs2:8485;hdfs3:8485/oushu</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled.oushu</name>

<value>true</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>shell(/bin/true)</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.oushu</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data1/hdfs/journaldata</value>

</property>

</configuration>
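The hdfs-site.xml above does not itself set dfs.namenode.name.dir or dfs.datanode.data.dir, even though NameNode and DataNode directories were created earlier. The packaged file presumably sets them already. The sketch below reports which of those property names are absent, so you can add them if needed. It uses a plain grep for `<name>...</name>`, not an XML parser.

```shell
#!/usr/bin/env bash
# Print each given property name that does not appear as <name>PROP</name> in FILE.
# Returns 1 if anything is missing, 0 otherwise. Text search only, not a full XML parse.
missing_props() {
  local file="$1"; shift
  local prop rc=0
  for prop in "$@"; do
    if ! grep -q "<name>[[:space:]]*${prop}[[:space:]]*</name>" "$file"; then
      echo "$prop"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `missing_props /usr/local/oushu/conf/common/hdfs-site.xml dfs.namenode.name.dir dfs.datanode.data.dir`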

Adjust hadoop-env.sh to suit your environment, for example:

vim /usr/local/oushu/conf/common/hadoop-env.sh

export JAVA_HOME="/usr/java/default"

export HADOOP_NAMENODE_OPTS="-Xmx6144m -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70"

export HADOOP_DATANODE_OPTS="-Xmx2048m -Xss256k"

Sync the configuration files under conf to all nodes:

lava scp -r -f ${HOME}/hdfshost /usr/local/oushu/conf/common/* =:/usr/local/oushu/conf/common/

KDC Configuration (Optional)#

If Kerberos is enabled, apply the following configuration.

Modify the HDFS Configuration#

vim /usr/local/oushu/conf/common/core-site.xml

<property>

<name>hadoop.security.authentication</name>

<value>kerberos</value>

</property>

<property>

<name>hadoop.security.authorization</name>

<value>true</value>

</property>

<property>

<name>hadoop.rpc.protection</name>

<value>authentication</value>

</property>

vim /usr/local/oushu/conf/common/hdfs-site.xml

<!-- NameNode security config -->

<property>

<name>dfs.namenode.keytab.file</name>

<value>/etc/security/keytabs/hdfs.keytab</value>

</property>

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>namenode/_HOST@OUSHU.COM</value>

</property>

<property>

<name>dfs.namenode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@OUSHU.COM</value>

</property>

<!-- Secondary NameNode security config -->

<property>

<name>dfs.secondary.namenode.keytab.file</name>

<value>/etc/security/keytabs/hdfs.keytab</value>

</property>

<property>

<name>dfs.secondary.namenode.kerberos.principal</name>

<value>namenode/_HOST@OUSHU.COM</value>

</property>

<property>

<name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@OUSHU.COM</value>

</property>

<!-- JournalNode security config -->

<property>

<name>dfs.journalnode.kerberos.principal</name>

<value>journalnode/_HOST@OUSHU.COM</value>

</property>

<property>

<name>dfs.journalnode.keytab.file</name>

<value>/etc/security/keytabs/hdfs.keytab</value>

</property>

<property>

<name>dfs.journalnode.kerberos.internal.spnego.principal</name>

<value>HTTP/_HOST@OUSHU.COM</value>

</property>

<!-- Web Authentication config -->

<property>

<name>dfs.web.authentication.kerberos.principal</name>

<value>HTTP/_HOST@OUSHU.COM</value>

</property>

<property>

<name>dfs.web.authentication.kerberos.keytab</name>

<value>/etc/security/keytabs/hdfs.keytab</value>

</property>

<!-- Enable SSL (HTTPS) access to the NameNode Web UI -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.http.policy</name>

<value>HTTPS_ONLY</value>

</property>

<!-- Kerberos authentication for the cluster's DataNodes -->

<property>

<name>dfs.datanode.keytab.file</name>

<value>/etc/security/keytabs/hdfs.keytab</value>

</property>

<property>

<name>dfs.datanode.kerberos.principal</name>

<value>datanode/_HOST@OUSHU.COM</value>

</property>

<property>

<name>dfs.data.transfer.protection</name>

<value>authentication</value>

</property>

<property>

<name>dfs.block.access.token.enable</name>

<value>true</value>

</property>

DataNode SSL Configuration#

DataNode SSL uses certificates signed by a self-generated CA:

Run the following in the ${HOME} directory on the first machine, hdfs1:

openssl req -new -x509 -passout pass:{password} -keyout bd_ca_key -out bd_ca_cert -days 9999 -subj "/C=CN/ST=beijing/L=beijing/O=m1_hostname/OU=m1_hostname/CN=m1_hostname"

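This generates two files: bd_ca_key and bd_ca_cert.

The following certificate-generation script performs the remaining SSL steps on every node. Running it completes the certificate setup.

touch ${HOME}/sslca.sh

Certificate generation requires passwords, and they must be strong. Below, {password} marks every option that you must replace with a strong password. The -passin value in the openssl x509 step must match the passphrase given to bd_ca_key when the CA was created.

Edit the script: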

#!/bin/bash

for node in $(cat ${HOME}/hdfshost); do

lava ssh -h ${node} "mkdir -p /var/lib/hadoop-hdfs/"

# Distribute the CA key and certificate to each machine

lava scp -r -h ${node} ${HOME}/bd_ca_cert =:${HOME}

lava scp -r -h ${node} ${HOME}/bd_ca_key =:${HOME}

# The six certificate-generation steps: create the node key pair, import the CA into the truststore, create a signing request, sign it with the CA, then import the CA and the signed certificate into the keystore

lava ssh -h ${node} "keytool -keystore keystore -alias localhost -validity 9999 -keypass {password} -storepass {password} -genkey -keyalg RSA -keysize 2048 -dname 'CN=${node}, OU=${node}, O=${node}, L=beijing, ST=beijing, C=CN'"

lava ssh -h ${node} "keytool -keystore truststore -alias CARoot -storepass {password} -noprompt -import -file bd_ca_cert"

lava ssh -h ${node} "keytool -storepass {password} -certreq -alias localhost -keystore keystore -file cert"

lava ssh -h ${node} "openssl x509 -req -CA bd_ca_cert -CAkey bd_ca_key -in cert -out cert_signed -days 9999 -CAcreateserial -passin pass:{password}"

lava ssh -h ${node} "keytool -storepass {password} -noprompt -keystore keystore -alias CARoot -import -file bd_ca_cert"

lava ssh -h ${node} "keytool -storepass {password} -keystore keystore -alias localhost -import -file cert_signed"

# Copy the keystore and truststore into place and hand them to the hdfs user

lava ssh -h ${node} "cp ${HOME}/keystore /var/lib/hadoop-hdfs/"

lava ssh -h ${node} "cp ${HOME}/truststore /var/lib/hadoop-hdfs/"

lava ssh -h ${node} "chown hdfs:hadoop /var/lib/hadoop-hdfs/keystore /var/lib/hadoop-hdfs/truststore"

done
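Before running the script, confirm that every {password} placeholder has been replaced. Otherwise the literal string {password} would silently become the real keystore password. The sketch below is minimal. Run it against sslca.sh now, and against ssl-client.xml and ssl-server.xml once they are written.

```shell
#!/usr/bin/env bash
# Fail if any given file still contains the literal placeholder {password}.
# Matching lines are printed with their line numbers to help locate them.
check_no_placeholder() {
  local f rc=0
  for f in "$@"; do
    if grep -nF '{password}' "$f"; then
      echo "ERROR: unreplaced {password} placeholder in $f" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_no_placeholder ${HOME}/sslca.sh && ${HOME}/sslca.sh`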

Run the script to generate the certificates:

chmod 755 ${HOME}/sslca.sh

${HOME}/sslca.sh

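Add the SSL configuration

The certificates are stored in /var/lib/hadoop-hdfs/, so the paths in ssl-client.xml and ssl-server.xml point there as well.

Create the following files under /usr/local/oushu/conf/common/: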

ssl-client.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property>

<name>ssl.client.truststore.location</name>

<value>/var/lib/hadoop-hdfs/truststore</value>

</property>

<property>

<name>ssl.client.truststore.password</name>

<value>{password}</value>

</property>

<property>

<name>ssl.client.truststore.type</name>

<value>jks</value>

</property>

<property>

<name>ssl.client.truststore.reload.interval</name>

<value>10000</value>

</property>

<property>

<name>ssl.client.keystore.location</name>

<value>/var/lib/hadoop-hdfs/keystore</value>

</property>

<property>

<name>ssl.client.keystore.password</name>

<value>{password}</value>

</property>

<property>

<name>ssl.client.keystore.keypassword</name>

<value>{password}</value>

</property>

<property>

<name>ssl.client.keystore.type</name>

<value>jks</value>

</property>

</configuration>

ssl-server.xml

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<property>

<name>ssl.server.truststore.location</name>

<value>/var/lib/hadoop-hdfs/truststore</value>

</property>

<property>

<name>ssl.server.truststore.password</name>

<value>{password}</value>

</property>

<property>

<name>ssl.server.truststore.type</name>

<value>jks</value>

</property>

<property>

<name>ssl.server.truststore.reload.interval</name>

<value>10000</value>

</property>

<property>

<name>ssl.server.keystore.location</name>

<value>/var/lib/hadoop-hdfs/keystore</value>

</property>

<property>

<name>ssl.server.keystore.password</name>

<value>{password}</value>

</property>

<property>

<name>ssl.server.keystore.keypassword</name>

<value>{password}</value>

</property>

<property>

<name>ssl.server.keystore.type</name>

<value>jks</value>

</property>

<property>

<name>ssl.server.exclude.cipher.list</name>

<value>TLS_ECDHE_RSA_WITH_RC4_128_SHA,SSL_DHE_RSA_EXPORT_WITH_DES40_CBC_SHA,

SSL_RSA_WITH_DES_CBC_SHA,SSL_DHE_RSA_WITH_DES_CBC_SHA,

SSL_RSA_EXPORT_WITH_RC4_40_MD5,SSL_RSA_EXPORT_WITH_DES40_CBC_SHA,

SSL_RSA_WITH_RC4_128_MD5</value>

</property>

</configuration>

Sync the configuration files to all nodes:

lava scp -r -f ${HOME}/hdfshost /usr/local/oushu/conf/common/* =:/usr/local/oushu/conf/common/

Startup#

On hdfs1, format the ZKFailoverController state in ZooKeeper:

sudo -u hdfs hdfs zkfc -formatZK

Start the JournalNodes with the following command:

lava ssh -f ${HOME}/jnhostfile -e 'sudo -u hdfs hdfs --daemon start journalnode'
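Formatting the NameNode writes to the shared edits directory (qjournal://hdfs1:8485;hdfs2:8485;hdfs3:8485/oushu), so the JournalNodes should be listening first. The sketch below polls a TCP port using bash's /dev/tcp redirection and coreutils `timeout`. The default retry count and interval are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Poll HOST:PORT until a TCP connection succeeds or TRIES attempts are used up.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" interval="${4:-2}" i
  for ((i = 1; i <= tries; i++)); do
    # Open (and on exit close) a connection; timeout guards against filtered ports hanging.
    if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    if [ "$i" -lt "$tries" ]; then
      sleep "$interval"
    fi
  done
  echo "ERROR: ${host}:${port} not reachable after ${tries} attempts" >&2
  return 1
}
```

Usage: `for jn in $(cat ${HOME}/jnhostfile); do wait_for_port "$jn" 8485 || break; done`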

Format and start the NameNode on hdfs1:

sudo -u hdfs hdfs namenode -format -clusterId ss

sudo -u hdfs hdfs --daemon start namenode

On the other NameNode, hdfs2, copy the metadata from hdfs1 (bootstrap the standby) and start the NameNode:

lava ssh -h hdfs2 -e 'sudo -u hdfs hdfs namenode -bootstrapStandby'

lava ssh -h hdfs2 -e 'sudo -u hdfs hdfs --daemon start namenode'

Start all DataNodes:

lava ssh -f ${HOME}/dnhostfile -e 'sudo -u hdfs hdfs --daemon start datanode'

Start the ZKFC process on hdfs2:

lava ssh -h hdfs2 -e 'sudo -u hdfs hdfs --daemon start zkfc'

Start the ZKFC process on hdfs1 so that its NameNode becomes the standby:

lava ssh -h hdfs1 -e 'sudo -u hdfs hdfs --daemon start zkfc'

Check Status#

Check whether HDFS is running correctly.

If Kerberos authentication is enabled, first obtain a ticket as the hdfs user:

su - hdfs

kinit -kt /etc/security/keytabs/hdfs.keytab hdfs@OUSHU.COM

# Normally, no error message means kinit succeeded; you can also check the exit status:

echo $?

0

# An exit status of 0 indicates success

Confirm the HDFS cluster state:

su - hdfs

hdfs haadmin -getAllServiceState

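The output should show one NameNode as active and the other as standby. Because the ZKFC on hdfs2 was started first, hdfs2 is normally the active one:

* hdfs2 active

* hdfs1 standby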

hdfs dfsadmin -report

The output should contain:

* Live datanodes (3):
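For scripted checks, you can evaluate the two commands above automatically. The sketch below makes two assumptions: getAllServiceState prints one `host:port state` pair per line, and dfsadmin -report contains a `Live datanodes (N):` line, as shown above. Both functions read the command output on stdin, so they can be tested without a cluster.

```shell
#!/usr/bin/env bash
# Succeed if exactly one NameNode is active and at least one is standby.
# Input: output of `hdfs haadmin -getAllServiceState` on stdin.
check_ha_state() {
  awk '$NF == "active" { a++ } $NF == "standby" { s++ }
       END { exit !(a == 1 && s >= 1) }'
}

# Print N from the "Live datanodes (N):" line of `hdfs dfsadmin -report` on stdin.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1
}
```

Usage, as the hdfs user: `hdfs haadmin -getAllServiceState | check_ha_state && echo "HA OK"`, and `[ "$(hdfs dfsadmin -report | live_datanodes)" -eq 3 ] && echo "DataNodes OK"`.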

If both checks pass, the cluster is deployed. Next, verify basic functionality.

First, create a test file:

echo 'oushu' > /home/hdfs/test.txt

Verify with HDFS commands:

hdfs dfs -put /home/hdfs/test.txt /

* No error is reported

hdfs dfs -cat /test.txt

* oushu

hdfs dfs -ls /

* Found 1 items

* One entry for /test.txt, listed as a regular file (permission string beginning with -) of 6 bytes
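You can fold the manual checks above into one round-trip test. The sketch below uploads a small file, reads it back, compares the content, and deletes it. The HDFS path /oushu_smoke_test.txt is a hypothetical name chosen to avoid clobbering /test.txt. Run it as the hdfs user, after kinit if Kerberos is enabled.

```shell
#!/usr/bin/env bash
# Round-trip smoke test against HDFS: put, cat, compare, remove.
hdfs_smoke_test() {
  local remote="${1:-/oushu_smoke_test.txt}"   # hypothetical test path
  local expected="oushu" local_file actual
  local_file="$(mktemp)"
  printf '%s\n' "$expected" > "$local_file"
  # -f overwrites a file left over from an earlier run.
  if ! hdfs dfs -put -f "$local_file" "$remote"; then
    rm -f "$local_file"
    return 1
  fi
  actual="$(hdfs dfs -cat "$remote")"
  # -skipTrash deletes immediately instead of moving the file to .Trash.
  hdfs dfs -rm -skipTrash "$remote" >/dev/null
  rm -f "$local_file"
  [ "$actual" = "$expected" ]
}
```

Usage: `hdfs_smoke_test && echo "HDFS read/write OK"`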

Common Commands#

Stop all DataNodes:

lava ssh -f ${HOME}/dnhostfile -e 'sudo -u hdfs hdfs --daemon stop datanode'

Stop all JournalNodes:

lava ssh -f ${HOME}/jnhostfile -e 'sudo -u hdfs hdfs --daemon stop journalnode'

Stop all NameNodes:

lava ssh -f ${HOME}/nnhostfile -e 'sudo -u hdfs hdfs --daemon stop namenode'
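All the start and stop commands in this guide share one shape, and a small wrapper keeps them consistent. The DRY_RUN switch is an addition for this sketch, not a lava feature. It only prints the lava command so you can review it first.

```shell
#!/usr/bin/env bash
# Run `hdfs --daemon ACTION ROLE` as the hdfs user on every host in HOSTFILE via lava.
# Set DRY_RUN=1 to print the lava command instead of executing it.
hdfs_role() {
  local action="$1" role="$2" hostfile="$3"
  case "$action" in start|stop|status) ;; *) echo "bad action: $action" >&2; return 2 ;; esac
  local remote="sudo -u hdfs hdfs --daemon ${action} ${role}"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf "lava ssh -f %s -e '%s'\n" "$hostfile" "$remote"
  else
    lava ssh -f "$hostfile" -e "$remote"
  fi
}
```

Usage: `DRY_RUN=1 hdfs_role stop datanode ${HOME}/dnhostfile` to preview, then run it again without DRY_RUN.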

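Register with Skylab (Optional)#

The machines on which HDFS is installed must first be added to Skylab through machine management. If you have not added them yet, see Register Machines.

On hdfs1, edit server.json under /usr/local/oushu/lava/conf and replace localhost with the IP address of the Skylab server. For installing lava, Skylab's base service, see: lava Installation.

Then create ~/hdfs.json with content like the following: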

{

"data": {

"name": "HDFSCluster",

"group_roles": [

{

"role": "hdfs.activenamenode",

"cluster_name": "namenode",

"group_name": "namenode-id",

"machines": [

{

"id": 1,

"name": "NameNode1",

"subnet": "lava",

"data_ip": "192.168.1.11",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

}

]

},

{

"role": "hdfs.standbynamenode",

"cluster_name": "namenode",

"group_name": "namenode-id",

"machines": [

{

"id": 2,

"name": "NameNode2",

"subnet": "lava",

"data_ip": "192.168.1.12",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

}

]

},

{

"role": "hdfs.journalnode",

"cluster_name": "journalnode",

"group_name": "journalnode-id",

"machines": [

{

"id": 1,

"name": "journalnode1",

"subnet": "lava",

"data_ip": "192.168.1.11",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 2,

"name": "journalnode2",

"subnet": "lava",

"data_ip": "192.168.1.12",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 3,

"name": "journalnode3",

"subnet": "lava",

"data_ip": "192.168.1.13",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

}

]

},

{

"role": "hdfs.datanode",

"cluster_name": "datanode",

"group_name": "datanode-id",

"machines": [

{

"id": 1,

"name": "datanode1",

"subnet": "lava",

"data_ip": "192.168.1.11",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 2,

"name": "datanode2",

"subnet": "lava",

"data_ip": "192.168.1.12",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 3,

"name": "datanode3",

"subnet": "lava",

"data_ip": "192.168.1.13",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

}

]

}

]

}

}

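In the file above, adjust the machine entries in each machines array to match your environment. On the machine where lava, the platform's base component, is installed, run: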

psql lavaadmin -p 4432 -U oushu -c "select m.id,m.name,s.name as subnet,m.private_ip as data_ip,m.public_ip as manage_ip,m.assist_port,m.ssh_port from machine as m,subnet as s where m.subnet_id=s.id;"

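This returns the machine information you need. Add each machine's entry to the machines array of every role that the machine serves.

For example, if hdfs1 serves as the active HDFS NameNode, add hdfs1's machine information to the machines array of the hdfs.activenamenode role.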
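Copying the query results into hdfs.json by hand is error-prone. The sketch below assumes you rerun the same query with psql's -A (unaligned) and -t (tuples only) options. Each row then arrives as pipe-separated fields in the order id|name|subnet|data_ip|manage_ip|assist_port|ssh_port, and the sketch turns each row into a machines entry in the format used above. It also assumes machine names contain no double quotes.

```shell
#!/usr/bin/env bash
# Convert psql -At rows (id|name|subnet|data_ip|manage_ip|assist_port|ssh_port)
# read on stdin into JSON objects for the "machines" arrays, one per line.
rows_to_machines() {
  awk -F'|' 'NF >= 7 {
    printf "{\"id\": %s, \"name\": \"%s\", \"subnet\": \"%s\", \"data_ip\": \"%s\", \"manage_ip\": \"%s\", \"assist_port\": %s, \"ssh_port\": %s}\n",
      $1, $2, $3, $4, $5, $6, $7
  }'
}
```

Usage: append `-At` to the psql command above and pipe its output into `rows_to_machines`. Then paste each resulting object into the machines array of the role that machine serves.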

Register the cluster with lava:

lava login -u oushu -p ********

lava onprem-register service -s HDFS -f ~/hdfs.json

If the command returns:

Add service by self success

the registration succeeded. If an error message is returned, resolve the problem it describes.

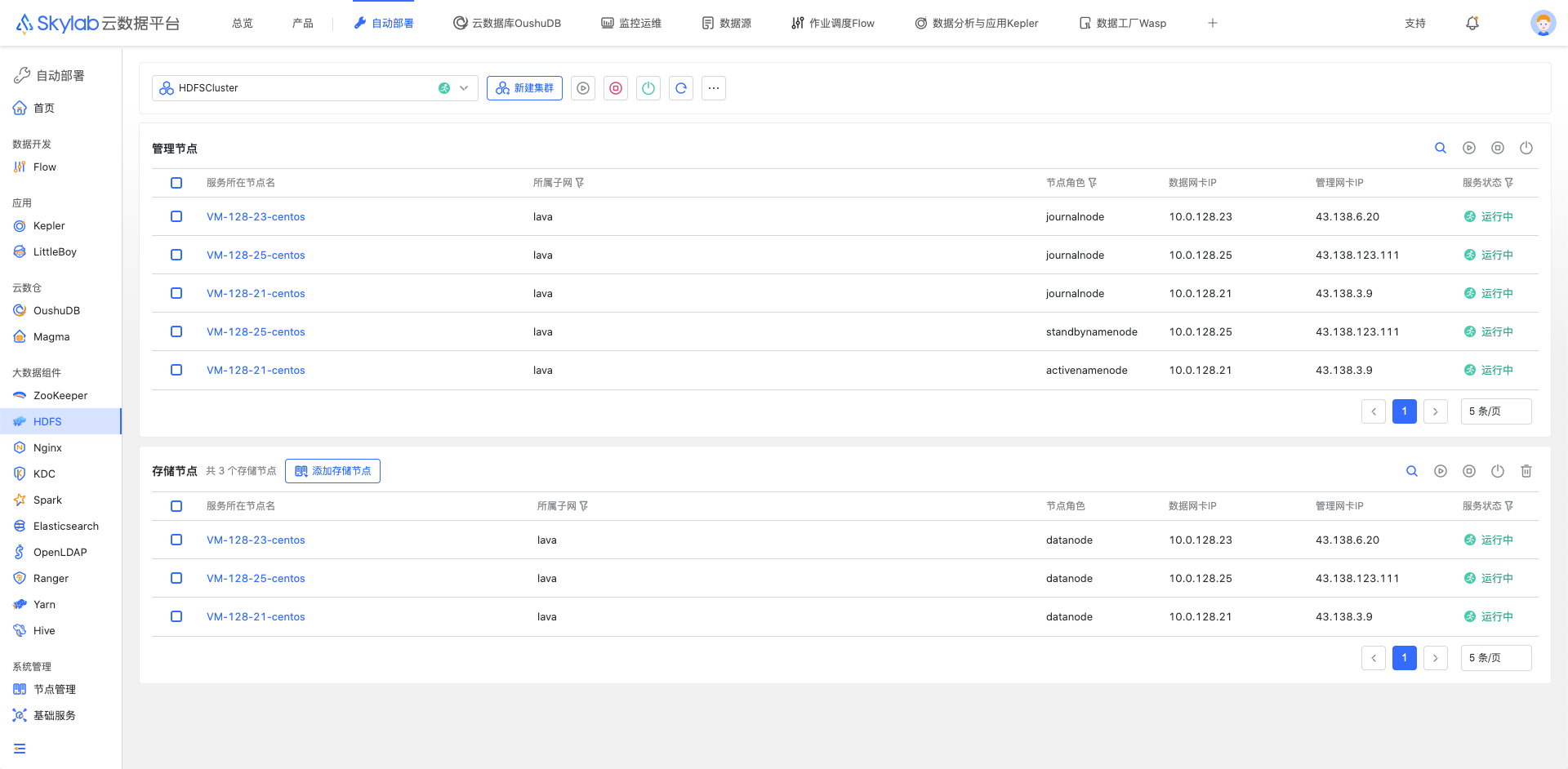

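After logging in to the web UI, open the automatic deployment module and find the corresponding service. The newly added cluster appears there, and the list monitors the status of the HDFS processes on each machine in real time.

Integrating HDFS with Ranger Authorization (Optional)#

Ranger Installation#

If Ranger is enabled, install the Ranger HDFS plugin on all HDFS nodes.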

ssh hdfs1

sudo su root

lava ssh -f ${HOME}/hdfshost -e "yum install -y ranger-hdfs-plugin"

lava ssh -f ${HOME}/hdfshost -e 'mkdir -p /usr/local/oushu/hdfs/etc'

lava ssh -f ${HOME}/hdfshost -e "ln -s /usr/local/oushu/conf/hdfs/ /usr/local/oushu/hdfs/etc/hadoop"

Ranger Configuration#

On hdfs1, edit /usr/local/oushu/ranger-hdfs-plugin_2.3.0/install.properties:

POLICY_MGR_URL=http://ranger1:6080

REPOSITORY_NAME=hdfsdev

COMPONENT_INSTALL_DIR_NAME=/usr/local/oushu/hdfs
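If you prefer to script the install.properties edits rather than use an editor, here is a minimal sed-based sketch. It assumes GNU sed and that each key appears at most once in KEY=value form. It also assumes values contain no `|` or `&` characters, which holds for the three values above.

```shell
#!/usr/bin/env bash
# Set KEY=VALUE in a properties file: replace an existing KEY= line, or append one.
set_prop() {
  local key="$1" value="$2" file="$3"
  if grep -q "^${key}=" "$file"; then
    # '|' is the sed delimiter so slashes in URLs and paths need no escaping.
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

Usage: `set_prop POLICY_MGR_URL http://ranger1:6080 /usr/local/oushu/ranger-hdfs-plugin_2.3.0/install.properties`, and likewise for REPOSITORY_NAME and COMPONENT_INSTALL_DIR_NAME.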

Sync the Ranger plugin configuration for HDFS to all nodes, then run the initialization script:

lava scp -r -f ${HOME}/hdfshost /usr/local/oushu/ranger-hdfs-plugin_2.3.0/install.properties =:/usr/local/oushu/ranger-hdfs-plugin_2.3.0/

lava ssh -f ${HOME}/hdfshost -e '/usr/local/oushu/ranger-hdfs-plugin_2.3.0/enable-hdfs-plugin.sh'

When the script finishes, the following message indicates success. Restart the service as instructed.

Ranger Plugin for hadoop has been enabled. Please restart hadoop to ensure that changes are effective.

Restart HDFS:

# Restart the HDFS cluster

lava ssh -f ${HOME}/nnhostfile -e 'sudo -E -u hdfs hdfs --daemon stop namenode'

lava ssh -f ${HOME}/dnhostfile -e 'sudo -E -u hdfs hdfs --daemon stop datanode'

lava ssh -f ${HOME}/jnhostfile -e 'sudo -E -u hdfs hdfs --daemon stop journalnode'

# Start the JournalNodes first: the HA NameNodes need a JournalNode quorum to load the shared edits

lava ssh -f ${HOME}/jnhostfile -e 'sudo -E -u hdfs hdfs --daemon start journalnode'

lava ssh -f ${HOME}/nnhostfile -e 'sudo -E -u hdfs hdfs --daemon start namenode'

lava ssh -f ${HOME}/dnhostfile -e 'sudo -E -u hdfs hdfs --daemon start datanode'

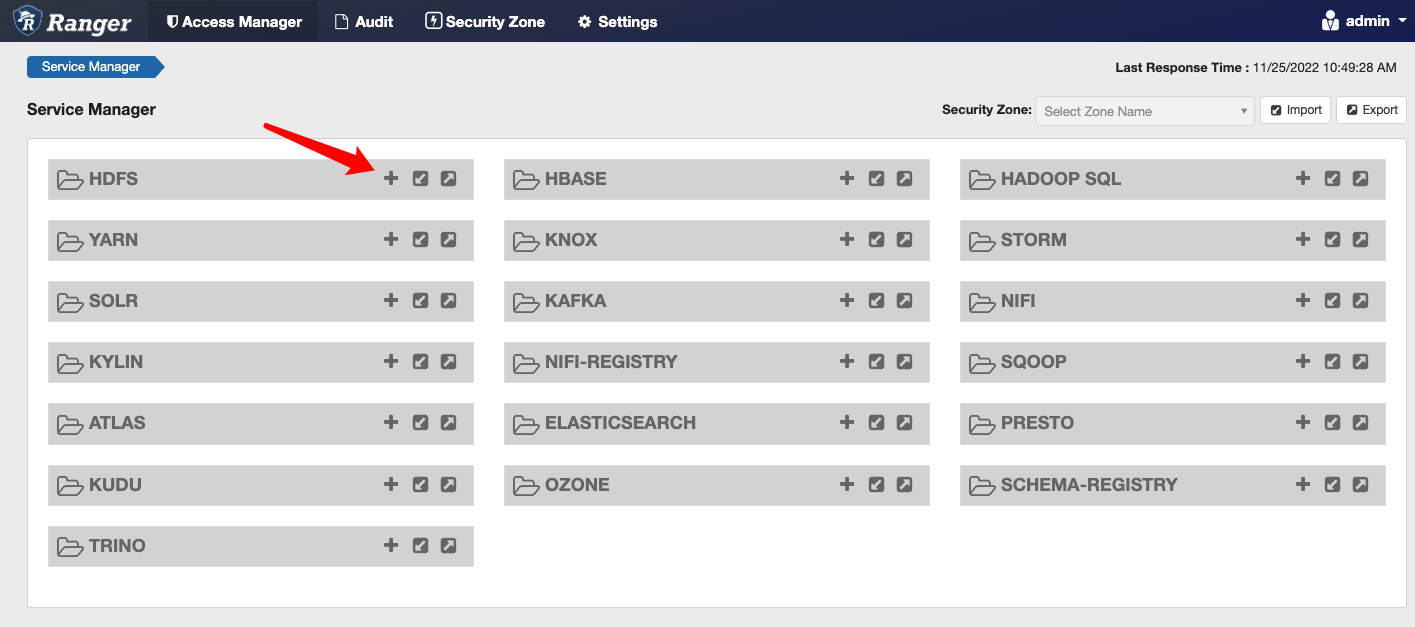

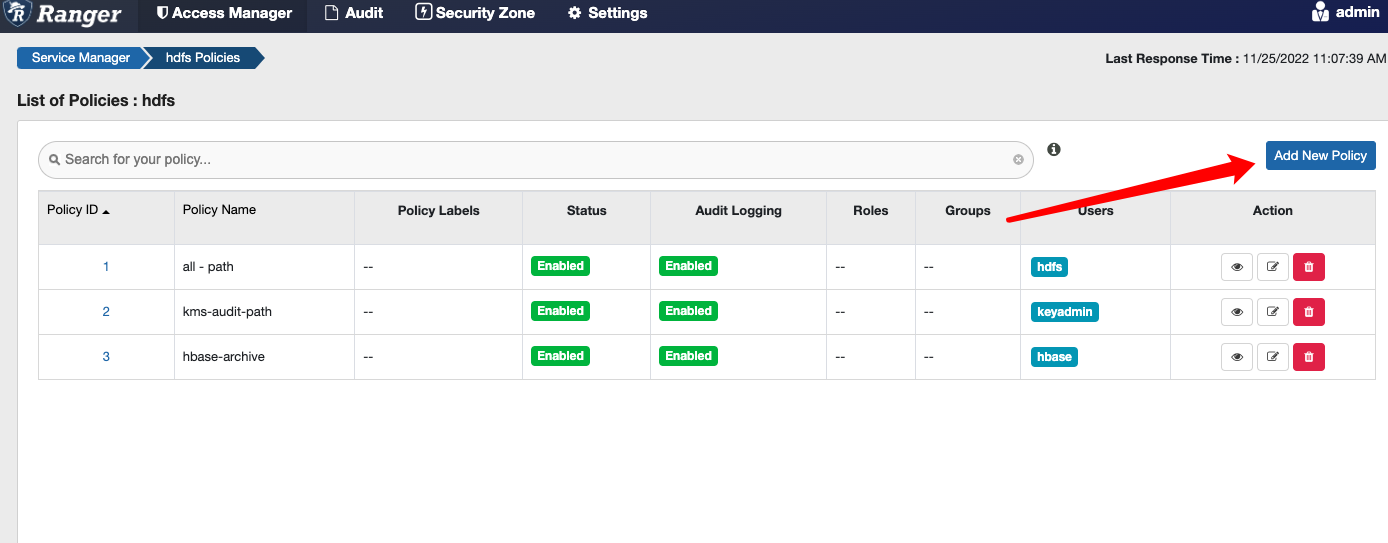

Configure User Permission Policies in the Ranger UI#

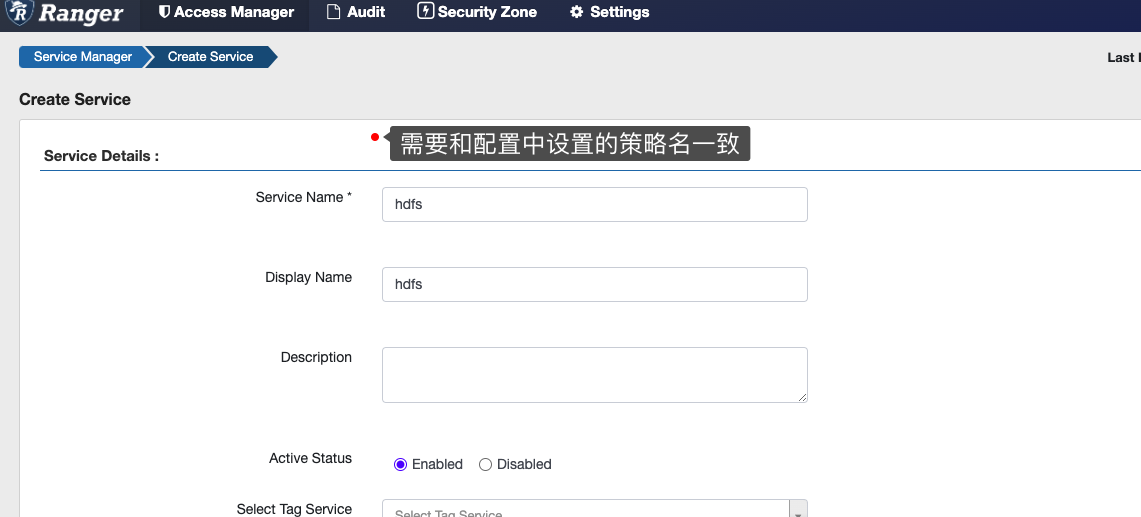

Create the HDFS Service#

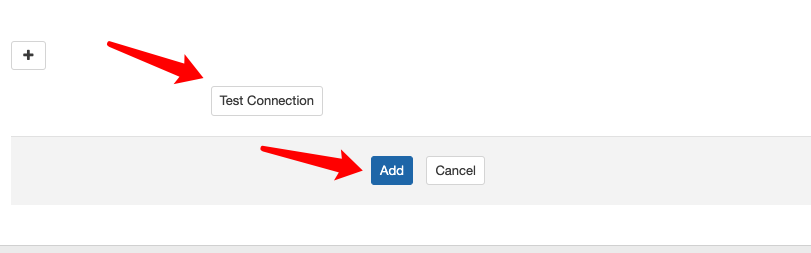

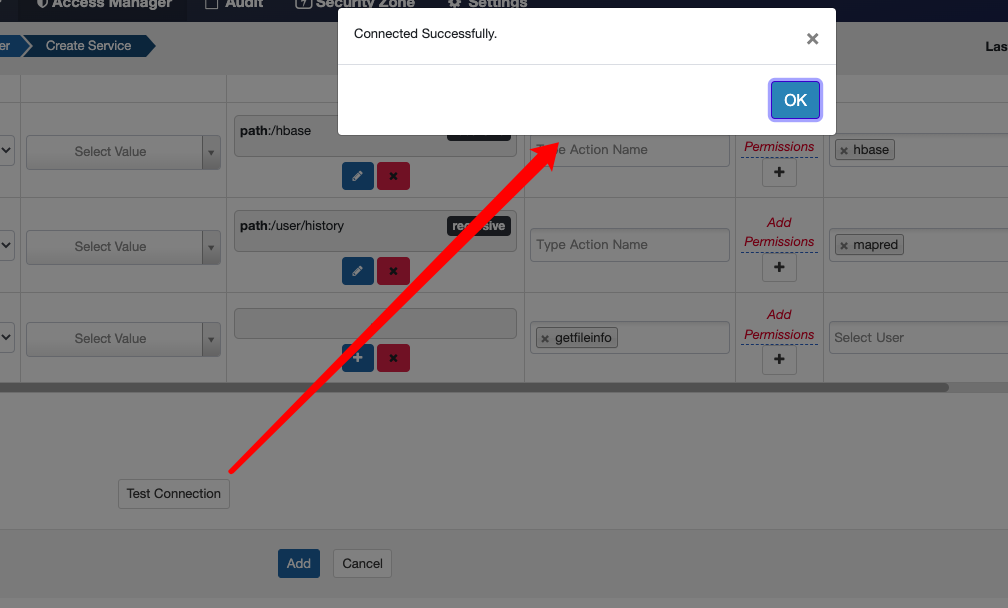

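Log in to the Ranger UI at http://ranger1:6080 and click the ➕ icon to add an HDFS Service.

Enter the service name. It must match REPOSITORY_NAME in install.properties.

Choose any username and password. Enter the URL in single-NameNode form, for example hdfs://master:9000. Once the service is added, the system detects both the active and the standby NameNode.

Run the connection test to check that the configuration is correct, then click Add to save.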

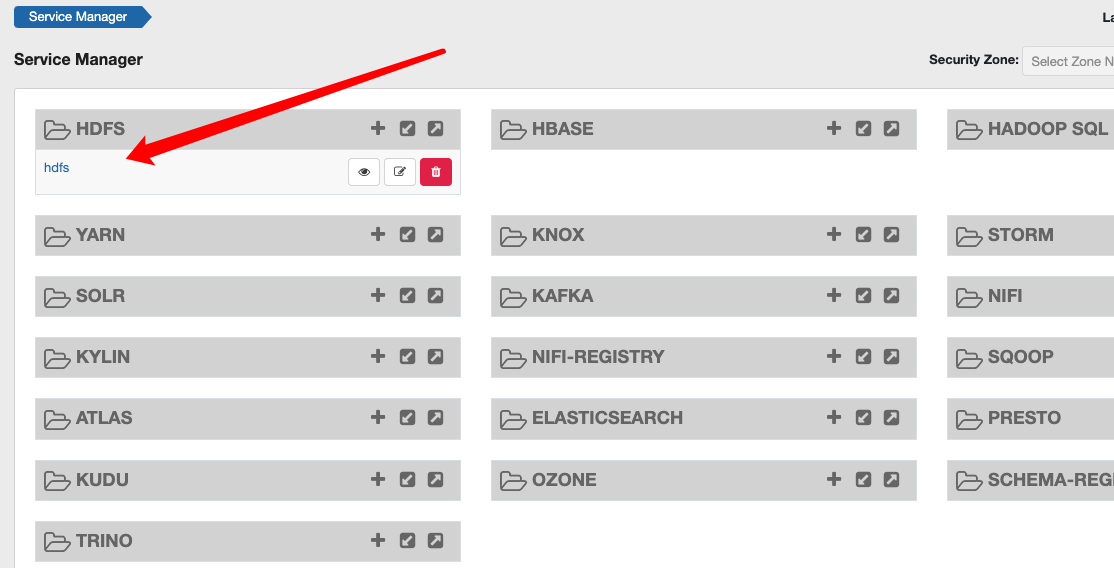

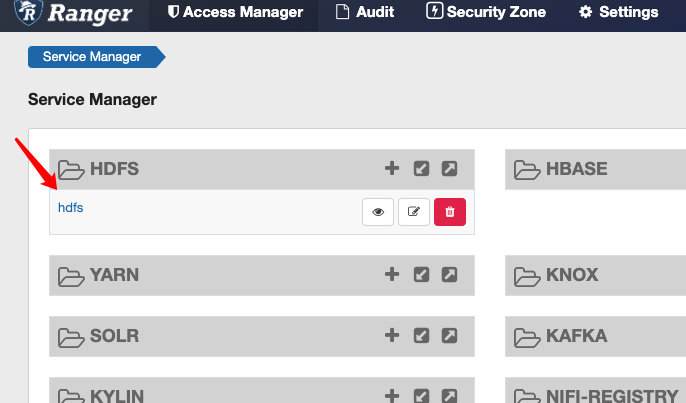

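Return to the home page to view the service you just added.

Create an Access Policy#

Find the service you just created and click its name.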

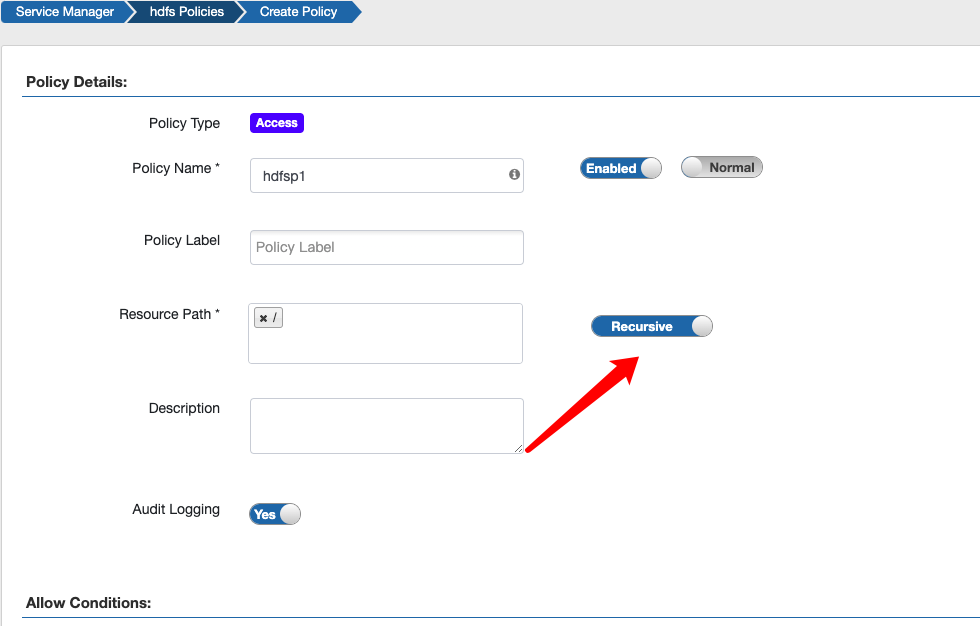

Click the 'Add New Policy' button.

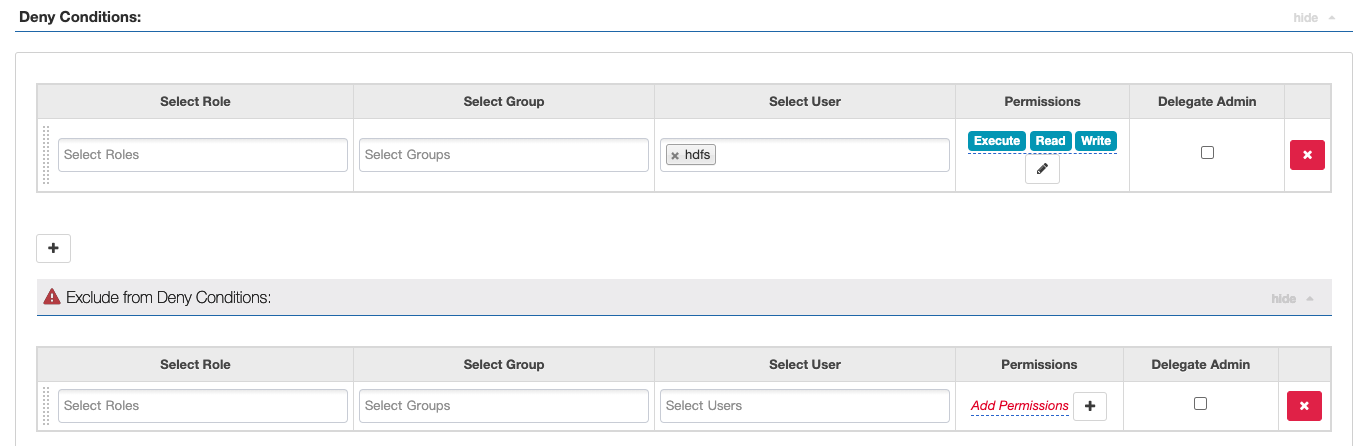

Configure the policy to deny the hdfs user's operations under '/', and make sure the recursive toggle is switched on. Set the permission type to deny, then click Save.

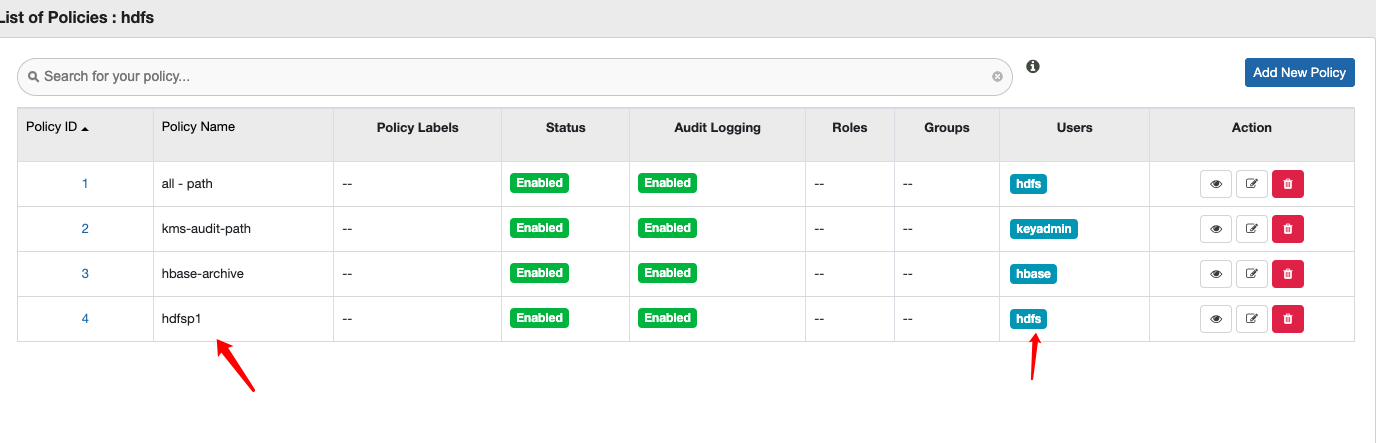

Review the policy you just configured.

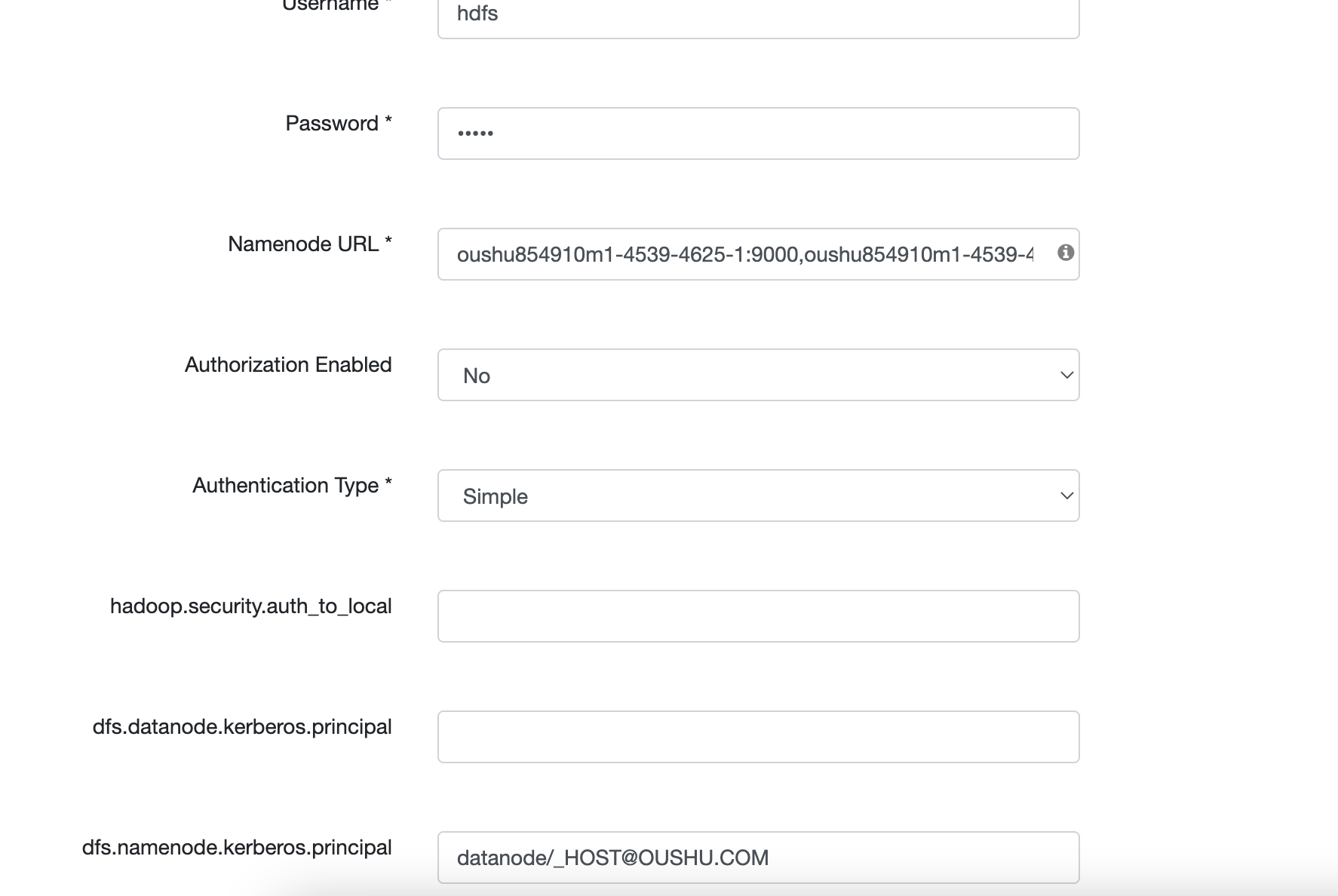

Ranger + Kerberos Notes#

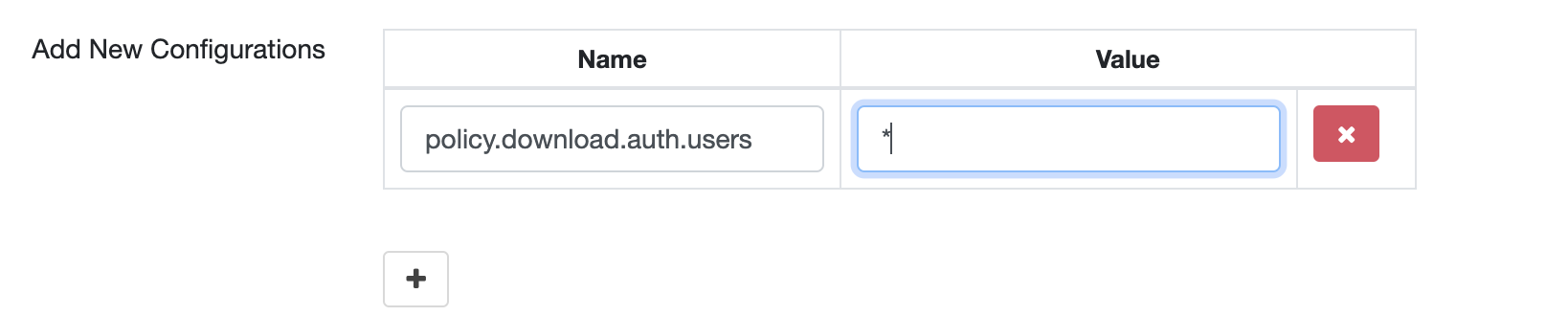

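When Kerberos is enabled for HDFS, Kerberos must also be enabled for the Ranger service. In addition, add the parameter shown in the screenshot above when configuring the HDFS repo.

Its value is the configured Kerberos principal's user name. With the HDFS principal naming used in this guide, that is namenode.

Verify the Result#

Log in to hdfs1 and access HDFS as the hdfs user: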

sudo su hdfs

hdfs dfs -ls /

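If the following message appears, the policy is in effect. A newly configured policy may take about a minute to take effect; if it has not, try again shortly.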

ls: Permission denied: user=hdfs, access=EXECUTE, inode="/"

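Install the HDFS Client (Optional)#

To run HDFS commands on machines where HDFS is not deployed, install the HDFS client on them.

The HDFS client hosts are assumed to be hdfs4, hdfs5, and hdfs6. Passwordless SSH between the HDFS machines was already set up at the beginning.

Preparation#

On hdfs1: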

ssh hdfs1

sudo su root

Add the following hostnames to ${HOME}/hdfsclienthost:

hdfs4

hdfs5

hdfs6

Installation#

lava ssh -f ${HOME}/hdfsclienthost -e 'yum install -y hdfs'

lava scp -r -f ${HOME}/hdfsclienthost /usr/local/oushu/conf/common/* =:/usr/local/oushu/conf/common/

lava ssh -f ${HOME}/hdfsclienthost -e 'chown -R hdfs:hadoop /usr/local/oushu/conf/common/'

Check#

ssh hdfs4

su hdfs

hdfs dfsadmin -report

# The output should contain:

* Live datanodes (3):