Command-Line Deployment#

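Prerequisites#

YARN depends on an HDFS cluster. For HDFS installation and deployment, see: HDFS Installation.

The HDFS service addresses are assumed to be hdfs1:9000,hdfs2:9000,hdfs3:9000

For ZooKeeper installation and deployment, see: ZooKeeper Installation.

The ZooKeeper service addresses are assumed to be zookeeper1:2181,zookeeper2:2181,zookeeper3:2181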

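Kerberos dependency (optional)#

If Kerberos authentication is enabled, see Kerberos Installation for installing and deploying Kerberos.

The KDC service address is assumed to be kdc1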

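Ranger dependency (optional)#

If Ranger authorization is enabled, see Ranger Installation for installing and deploying Ranger.

The Ranger service address is assumed to be ranger1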

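Configure the yum repository and install lava#

Log in to yarn1 and switch to the root user:

ssh yarn1

su - root

Configure the yum repository and install the lava command-line management tool: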

# Fetch the repo file from the yum repository host (assumed to be 192.168.1.10)

scp root@192.168.1.10:/etc/yum.repos.d/oushu.repo /etc/yum.repos.d/oushu.repo

# Append the yum repository host's entry to /etc/hosts

# Install the lava command-line management tool

yum clean all

yum makecache

yum install -y lava

Create a yarnhost file:

touch yarnhost

Set the contents of yarnhost to the hostnames of all YARN nodes:

yarn1

yarn2

yarn3
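Instead of creating the host files with touch and filling them in an editor, they can be written in one step. A minimal bash sketch; the function name `write_hostfile` is hypothetical and not part of lava, which only needs a plain file with one hostname per line, as in the listing above.

```bash
# Hypothetical helper (not part of lava): write a host-list file
# with one hostname per line, overwriting any existing content.
write_hostfile() {
  local file=$1
  shift
  # printf reuses the format string for every remaining argument
  printf '%s\n' "$@" > "$file"
}
```

For example, `write_hostfile "${HOME}/yarnhost" yarn1 yarn2 yarn3` produces the yarnhost file above, and `write_hostfile "${HOME}/rmhost" yarn1 yarn2` produces the rmhost file used in the installation step.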

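On the first node, exchange public keys with the other nodes in the cluster so that passwordless SSH works and configuration files can be distributed:

# Exchange public keys with the other machines in the cluster

lava ssh-exkeys -f ${HOME}/yarnhost -p ********

# Distribute the repo file to the other machines in the cluster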

lava scp -f ${HOME}/yarnhost /etc/yum.repos.d/oushu.repo =:/etc/yum.repos.d

Installation#

Preparation#

Create an rmhost file:

touch rmhost

Set the contents of rmhost to the hostnames of the YARN ResourceManager nodes:

yarn1

yarn2

Install YARN:

lava ssh -f ${HOME}/yarnhost -e 'sudo yum install -y yarn'

lava ssh -f ${HOME}/yarnhost -e 'mkdir -p /data1/yarn/nodemanager'

lava ssh -f ${HOME}/yarnhost -e 'chown -R yarn:hadoop /data1/yarn/'

lava ssh -f ${HOME}/yarnhost -e 'chmod -R 755 /data1/yarn/'

Install MapReduce (the default compute engine; optional):

lava ssh -f ${HOME}/yarnhost -e 'sudo yum install -y mapreduce'

To install and use the client on a non-YARN node, follow the client installation steps in the HDFS chapter.

Kerberos preparation (optional)#

If Kerberos is enabled, install the Kerberos client on all YARN nodes:

lava ssh -f ${HOME}/yarnhost -e "yum install -y krb5-libs krb5-workstation"

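From yarn1, run the following commands to open the Kerberos admin console on the KDC:

ssh kdc1

kadmin.local

In the console, run the following:

# Create a principal for each role on each node according to the role plan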

addprinc -randkey resourcemanager/yarn1@OUSHU.COM

addprinc -randkey resourcemanager/yarn2@OUSHU.COM

addprinc -randkey nodemanager/yarn1@OUSHU.COM

addprinc -randkey nodemanager/yarn2@OUSHU.COM

addprinc -randkey nodemanager/yarn3@OUSHU.COM

addprinc -randkey HTTP/yarn1@OUSHU.COM

addprinc -randkey HTTP/yarn2@OUSHU.COM

addprinc -randkey HTTP/yarn3@OUSHU.COM

addprinc -randkey yarn@OUSHU.COM

# Export each principal to the keytab file

ktadd -k /etc/security/keytabs/yarn.keytab resourcemanager/yarn1@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab resourcemanager/yarn2@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab nodemanager/yarn1@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab nodemanager/yarn2@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab nodemanager/yarn3@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab yarn@OUSHU.COM

# Append the keys of the HTTP principals to the same keytab file

ktadd -k /etc/security/keytabs/yarn.keytab HTTP/yarn1@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab HTTP/yarn2@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab HTTP/yarn3@OUSHU.COM

# If you use the MapReduce engine, also run the following

addprinc -randkey mapreduce@OUSHU.COM

ktadd -k /etc/security/keytabs/yarn.keytab mapreduce@OUSHU.COM

# Done; exit the console

quit
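The addprinc/ktadd sequence follows a fixed pattern per role and host, so it can also be generated instead of typed interactively. A sketch under stated assumptions: the helper `yarn_principals` is hypothetical, it assumes (as in the list above) that HTTP principals are created for every NodeManager host, and it relies on `kadmin.local -q` to run one query non-interactively on kdc1.

```bash
# Hypothetical helper: print the YARN principals for a role plan.
#   $1 realm, $2 space-separated ResourceManager hosts,
#   $3 space-separated NodeManager hosts (HTTP principals are created for these)
yarn_principals() {
  local realm=$1 rm_hosts=$2 nm_hosts=$3 h
  for h in $rm_hosts; do echo "resourcemanager/${h}@${realm}"; done
  for h in $nm_hosts; do echo "nodemanager/${h}@${realm}"; done
  for h in $nm_hosts; do echo "HTTP/${h}@${realm}"; done
  echo "yarn@${realm}"
}
```

On kdc1, the list could then drive the same two commands per principal: `for p in $(yarn_principals OUSHU.COM "yarn1 yarn2" "yarn1 yarn2 yarn3"); do kadmin.local -q "addprinc -randkey $p"; kadmin.local -q "ktadd -k /etc/security/keytabs/yarn.keytab $p"; done`. The optional mapreduce@OUSHU.COM principal still has to be added separately.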

Return to yarn1 and distribute the generated keytab:

ssh yarn1

lava ssh -f ${HOME}/yarnhost -e 'mkdir -p /etc/security/keytabs/'

scp root@kdc1:/etc/krb5.conf /etc/krb5.conf

scp root@kdc1:/etc/security/keytabs/yarn.keytab /etc/security/keytabs/yarn.keytab

lava scp -r -f ${HOME}/yarnhost /etc/krb5.conf =:/etc/krb5.conf

lava scp -r -f ${HOME}/yarnhost /etc/security/keytabs/yarn.keytab =:/etc/security/keytabs/yarn.keytab

lava ssh -f ${HOME}/yarnhost -e 'chown yarn:hadoop /etc/security/keytabs/yarn.keytab'

Configuration#

HA configuration#

Edit the YARN configuration:

vim /usr/local/oushu/conf/common/yarn-site.xml

<!-- Add the following configuration -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Set CPU and memory according to the actual hardware -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>8192</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>4096</value>

</property>

<!-- Set the actual ZooKeeper ensemble address -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>zookeeper1:2181,zookeeper2:2181,zookeeper3:2181</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn1</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>yarn1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>yarn2</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>yarn1:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>yarn2:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm1</name>

<value>yarn1:8090</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm2</name>

<value>yarn2:8090</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.scheduler.capacity.maximum-am-resource-percent</name>

<value>0.6</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/data1/yarn/nodemanager</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/usr/local/oushu/log/hadoop</value>

</property>


Synchronize the configuration files:

# Copy the configuration files to the other nodes

lava scp -r -f ${HOME}/yarnhost /usr/local/oushu/conf/common/* =:/usr/local/oushu/conf/common/
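Because yarn-site.xml grows long and is edited in several places in this guide, the same property can end up defined more than once, which makes it unclear which value is intended. A minimal bash sketch for spotting this, assuming each `<name>` element sits on a single line as in the snippets here; the helper `dup_props` is hypothetical.

```bash
# Hypothetical helper: print property names that are defined more than once.
# Naive text matching: names inside XML comments are counted as well.
dup_props() {
  grep -o '<name>[^<]*</name>' "$1" |
    sed -e 's/<name>//' -e 's#</name>##' |
    sort | uniq -d
}
```

Running `dup_props /usr/local/oushu/conf/common/yarn-site.xml` should print nothing; any name it prints is defined at least twice.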

Kerberos configuration (optional)#

vim /usr/local/oushu/conf/common/yarn-site.xml

<!-- resourcemanager security config -->

<property>

<name>yarn.resourcemanager.keytab</name>

<value>/etc/security/keytabs/yarn.keytab</value>

</property>

<property>

<name>yarn.resourcemanager.principal</name>

<value>resourcemanager/_HOST@OUSHU.COM</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.spnego-principal</name>

<value>HTTP/_HOST@OUSHU.COM</value>

</property>

<!-- nodemanager security config -->

<property>

<name>yarn.nodemanager.keytab</name>

<value>/etc/security/keytabs/yarn.keytab</value>

</property>

<property>

<name>yarn.nodemanager.principal</name>

<value>nodemanager/_HOST@OUSHU.COM</value>

</property>

<!-- If SSL is enabled for YARN -->

<property>

<name>yarn.http.policy</name>

<value>HTTPS_ONLY</value>

</property>

<property>

<name>yarn.nodemanager.container-executor.class</name>

<value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.group</name>

<value>hadoop</value>

</property>

<!-- Path to the LinuxContainerExecutor binary -->

<property>

<name>yarn.nodemanager.linux-container-executor.path</name>

<value>/usr/local/oushu/yarn/bin/container-executor</value>

</property>
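Hadoop replaces `_HOST` in these principals with the local host's name (lowercased), which is why the keytab needs a separate resourcemanager/nodemanager principal per node. A small bash sketch of that substitution, useful for checking that the keytab contains the principal a given node will ask for. The helper name is hypothetical, and Hadoop resolves the hostname with its own logic (typically the canonical FQDN), which may differ from the name you pass in.

```bash
# Hypothetical helper: mimic the _HOST substitution in a principal.
#   $1 principal pattern (e.g. nodemanager/_HOST@OUSHU.COM), $2 hostname
expand_host_principal() {
  local principal=$1 host=${2,,}   # ${var,,} lowercases (bash 4+)
  printf '%s\n' "${principal/_HOST/$host}"
}
```

For example, on a node: `klist -kt /etc/security/keytabs/yarn.keytab | grep -F "$(expand_host_principal 'nodemanager/_HOST@OUSHU.COM' "$(hostname -f)")"` should find at least one entry.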

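Set ownership and permissions of container-executor and container-executor.cfg#

On every node, container-executor must be owned by user root and group hadoop.

On every node, container-executor.cfg must be owned by user root and group hadoop, and all of its parent directories must be owned by root.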

lava ssh -f ${HOME}/yarnhost -e 'chown root:hadoop /usr/local/oushu/yarn/bin/container-executor'

lava ssh -f ${HOME}/yarnhost -e 'chmod 6050 /usr/local/oushu/yarn/bin/container-executor'

lava ssh -f ${HOME}/yarnhost -e 'mkdir -p /etc/hadoop/'

cp /usr/local/oushu/conf/common/container-executor.cfg /etc/hadoop/

vim /etc/hadoop/container-executor.cfg

Set the file contents to:

yarn.nodemanager.local-dirs=/data1/yarn/nodemanager

yarn.nodemanager.log-dirs=/usr/local/oushu/log/hadoop

yarn.nodemanager.linux-container-executor.group=hadoop

banned.users=

allowed.system.users=

min.user.id=1000
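The same file can also be written non-interactively, and the values that must agree with yarn-site.xml (local-dirs, log-dirs and the executor group) can be read back for comparison. A minimal bash sketch; `write_container_executor_cfg` and `cfg_value` are hypothetical helpers, and the contents simply repeat the entries above.

```bash
# Hypothetical helper: write the container-executor.cfg entries listed above.
write_container_executor_cfg() {
  cat > "$1" <<'EOF'
yarn.nodemanager.local-dirs=/data1/yarn/nodemanager
yarn.nodemanager.log-dirs=/usr/local/oushu/log/hadoop
yarn.nodemanager.linux-container-executor.group=hadoop
banned.users=
allowed.system.users=
min.user.id=1000
EOF
}

# Hypothetical helper: print the value of key $2 in key=value file $1
# (exact key match; prints an empty line for a key with an empty value).
cfg_value() {
  awk -F= -v k="$2" '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$1"
}
```

For example, after `write_container_executor_cfg /etc/hadoop/container-executor.cfg`, compare `cfg_value /etc/hadoop/container-executor.cfg yarn.nodemanager.local-dirs` with the yarn.nodemanager.local-dirs value in yarn-site.xml.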

lava scp -r -f ${HOME}/yarnhost /etc/hadoop/container-executor.cfg =:/etc/hadoop/

lava ssh -f ${HOME}/yarnhost -e 'chown root:hadoop /etc/hadoop/container-executor.cfg'

lava ssh -f ${HOME}/yarnhost -e 'chmod 400 /etc/hadoop/container-executor.cfg'
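To confirm that ownership and modes took effect (the NodeManager typically fails to start when they are wrong), you can compare `stat` output with the expected values. A minimal bash sketch assuming GNU coreutils `stat`; `check_owner_mode` is a hypothetical helper.

```bash
# Hypothetical helper: succeed only if path $1 has owner:group $2 and octal mode $3.
# GNU stat format: %U = owner name, %G = group name, %a = octal permission bits.
check_owner_mode() {
  local actual
  actual=$(stat -c '%U:%G %a' "$1") || return 1
  if [ "$actual" = "$2 $3" ]; then
    return 0
  fi
  echo "$1: expected '$2 $3', found '$actual'" >&2
  return 1
}
```

On each node, `check_owner_mode /usr/local/oushu/yarn/bin/container-executor root:hadoop 6050 && check_owner_mode /etc/hadoop/container-executor.cfg root:hadoop 400` should succeed silently.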

If you use the MapReduce compute engine:

vim /usr/local/oushu/conf/common/mapred-site.xml

<configuration>

<property>

<!-- Run MapReduce on YARN instead of locally; required whether or not Kerberos is enabled -->

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- If SSL is enabled for MapReduce -->

<property>

<name>mapreduce.jobhistory.http.policy</name>

<value>HTTPS_ONLY</value>

</property>

</configuration>

Distribute the modified configuration:

lava scp -r -f ${HOME}/yarnhost /usr/local/oushu/conf/common/mapred-site.xml =:/usr/local/oushu/conf/common/

lava scp -r -f ${HOME}/yarnhost /usr/local/oushu/conf/common/yarn-site.xml =:/usr/local/oushu/conf/common/

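Configuration tuning (optional)#

With only the default configuration, performance in practice may be poor and jobs may not run as well as expected. In that case, consider tuning YARN and MapReduce.

Tuning is generally based on the nodes' actual memory and CPU capacity, so first check memory and CPU usage. As a rule of thumb, the number of CPU cores (vcores) allocated to YARN is two to three times the number of physical cores.

# Check memory usage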

$ free -h

              total        used        free      shared  buff/cache   available
Mem:            61G         15G         32G        273M         13G         45G
Swap:            0B          0B          0B
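The two checks above can be turned into starting values. This sketch is illustrative only: the 2x to 3x vcore factor is the rule of thumb stated above, while reserving 20% of physical memory for the OS and other daemons before giving the rest to the NodeManager is an assumption of this example, not a value from this guide. The helper names are hypothetical.

```bash
# Hypothetical helper: print the vcore range (2x and 3x the given core count).
suggest_vcores() {
  echo "$(( $1 * 2 )) $(( $1 * 3 ))"
}

# Hypothetical helper: read total memory in MB from `free -m` output on stdin.
total_mem_mb() {
  awk '/^Mem:/ { print $2 }'
}

# Hypothetical helper: a starting value for yarn.nodemanager.resource.memory-mb,
# keeping $2 percent (default 20, an assumption) of $1 MB for everything else.
suggest_nm_memory_mb() {
  local total=$1 reserve_pct=${2:-20}
  echo $(( total * (100 - reserve_pct) / 100 ))
}
```

For example, `suggest_nm_memory_mb "$(free -m | total_mem_mb)"`. If you use `suggest_vcores "$(nproc)"`, note that `nproc` counts logical CPUs, which may include hyper-threads rather than only physical cores.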

Edit /usr/local/oushu/conf/common/mapred-site.xml to set the memory used by jobs:

<property>

<name>mapreduce.am.max-attempts</name>

<value>2</value>

</property>

<property>

<name>mapreduce.job.counters.max</name>

<value>130</value>

</property>

<property>

<name>mapreduce.job.reduce.slowstart.completedmaps</name>

<value>0.05</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024m</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>2048</value>

</property>

<property>

<name>mapreduce.map.sort.spill.percent</name>

<value>0.7</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx1024m</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>2048</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.input.buffer.percent</name>

<value>0.8</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.merge.percent</name>

<value>0.75</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>30</value>

</property>

<property>

<name>mapreduce.reduce.speculative</name>

<value>false</value>

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>100</value>

</property>

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>358</value>

</property>

<property>

<name>yarn.app.mapreduce.am.command-opts</name>

<value>-Xmx1024m</value>

</property>

<property>

<name>yarn.app.mapreduce.am.resource.mb</name>

<value>2048</value>

</property>

<property>

<name>mapreduce.map.cpu.vcores</name>

<value>2</value>

</property>

<property>

<name>mapreduce.reduce.cpu.vcores</name>

<value>2</value>

</property>

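<!-- Legacy MRv1 property: MapReduce on YARN (MRv2) does not reuse task JVMs, so this setting likely has no effect -->

<property>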

<name>mapred.job.reuse.jvm.num.tasks</name>

<value>10</value>

</property>
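Each `*.java.opts` heap (-Xmx) has to fit inside the matching container size (`*.memory.mb`), with headroom for non-heap JVM memory; the values above use a 1024 MB heap in 2048 MB containers. A bash sketch of that check; the helpers are hypothetical, only the m/g units of -Xmx are handled, and the 80% ceiling is a common rule of thumb assumed here rather than something this guide specifies.

```bash
# Hypothetical helper: print the -Xmx value from a JVM options string in MB.
# Handles only m/M and g/G suffixes (the last -Xmx wins); returns 1 if none is found.
xmx_mb() {
  local num unit
  num=$(printf '%s\n' "$1" | sed -n 's/.*-Xmx\([0-9][0-9]*\)[mMgG].*/\1/p')
  unit=$(printf '%s\n' "$1" | sed -n 's/.*-Xmx[0-9][0-9]*\([mMgG]\).*/\1/p')
  case $unit in
    m|M) echo "$num" ;;
    g|G) echo $(( num * 1024 )) ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: succeed if heap $1 MB is at most $3 percent
# (default 80, an assumed rule of thumb) of container size $2 MB.
heap_fits() {
  local heap=$1 container=$2 pct=${3:-80}
  (( heap * 100 <= container * pct ))
}
```

For example, `heap_fits "$(xmx_mb -Xmx1024m)" 2048` succeeds for the map and reduce settings above.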

Edit /usr/local/oushu/conf/common/yarn-site.xml:

<!-- container -->

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>12800</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>8</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

</property>

<!-- nodemanager -->

<property>

<name>yarn.nodemanager.container-metrics.unregister-delay-ms</name>

<value>60000</value>

</property>

<property>

<name>yarn.nodemanager.container-monitor.interval-ms</name>

<value>3000</value>

</property>

<property>

<name>yarn.nodemanager.log-aggregation.compression-type</name>

<value>gz</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>8</value>

</property>

<!-- Must not exceed the node's physical memory; adjust to the actual hardware -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>123904</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<!-- resourcemanager -->

<property>

<name>yarn.resourcemanager.am.max-attempts</name>

<value>2</value>

</property>

<property>

<name>yarn.resourcemanager.monitor.capacity.preemption.natural_termination_factor</name>

<value>1</value>

</property>

<property>

<name>yarn.resourcemanager.monitor.capacity.preemption.total_preemption_per_round</name>

<value>0.33</value>

</property>

<property>

<name>yarn.resourcemanager.placement-constraints.handler</name>

<value>scheduler</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>


<!--

<property>

<name>yarn.nodemanager.container-executor.class</name>

<value>org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.group</name>

<value>hadoop</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.nonsecure-mode.limit-users</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.linux-container-executor.nonsecure-mode.local-user</name>

<value>yarn</value>

</property>

-->
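The scheduler limits only make sense if minimum-allocation-mb is at most maximum-allocation-mb, which in turn is at most yarn.nodemanager.resource.memory-mb; the NodeManager memory itself should stay below the node's physical memory. A naive bash sketch for reading values back out of yarn-site.xml and checking that ordering. `xml_prop` and `alloc_consistent` are hypothetical; `xml_prop` uses plain text matching, so it also sees properties inside XML comments and returns only the first definition.

```bash
# Hypothetical helper: print the <value> of the first property named $2 in file $1.
# Naive text matching; assumes <value> follows <name> on the same or a later line.
xml_prop() {
  awk -v n="<name>$2</name>" '
    index($0, n) { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, ""); print; exit
    }
  ' "$1"
}

# Hypothetical helper: succeed if min <= max <= NodeManager memory (all in MB).
alloc_consistent() {
  local min=$1 max=$2 nm=$3
  (( min <= max && max <= nm ))
}
```

For example, read yarn.scheduler.minimum-allocation-mb, yarn.scheduler.maximum-allocation-mb and yarn.nodemanager.resource.memory-mb from /usr/local/oushu/conf/common/yarn-site.xml with `xml_prop` and pass the three values to `alloc_consistent`.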

You can also give the NameNode more memory to improve performance; see /usr/local/oushu/conf/common/hadoop-env.sh:

export HADOOP_NAMENODE_OPTS="-Xmx6144m -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70"

export HADOOP_DATANODE_OPTS="-Xmx2048m -Xss256k"

Increase the value after "-Xmx" to allocate more memory.

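Start#

On yarn1, if Kerberos authentication is enabled (optional):

su - yarn

kinit -kt /etc/security/keytabs/yarn.keytab yarn@OUSHU.COM

# Normally, no error message means success. You can also check the exit status:

echo $?

0

# An exit status of 0 means success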

Start the YARN services:

# Start the ResourceManagers

lava ssh -f ${HOME}/rmhost -e 'sudo -E -u yarn yarn --daemon start resourcemanager'

# Start the NodeManagers

lava ssh -f ${HOME}/yarnhost -e 'sudo -E -u yarn yarn --daemon start nodemanager'

Check status#

Check the YARN cluster:

Overall ResourceManager state:

yarn rmadmin -getAllServiceState

yarn1:8033 active

yarn2:8033 standby

View NodeManager information:

yarn node -list -all

Total Nodes:3
         Node-Id       Node-State  Node-Http-Address  Number-of-Running-Containers
     yarn1:45477          RUNNING         yarn1:8042                             0
     yarn2:38203          RUNNING         yarn2:8042                             0
     yarn3:44035          RUNNING         yarn3:8042                             0
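For scripted checks (for example after a restart), the two outputs above can be parsed: in an HA pair exactly one ResourceManager should report active, and every NodeManager should be RUNNING. A bash sketch that reads the command output on stdin; the helper names are hypothetical, and the parsing assumes the column layout shown above.

```bash
# Hypothetical helper: count "active" ResourceManagers in
# `yarn rmadmin -getAllServiceState` output read from stdin.
count_active_rm() {
  awk '$NF == "active" { n++ } END { print n + 0 }'
}

# Hypothetical helper: count nodes whose Node-State column is RUNNING in
# `yarn node -list -all` output read from stdin.
count_running_nm() {
  awk '$2 == "RUNNING" { n++ } END { print n + 0 }'
}
```

For example, `yarn rmadmin -getAllServiceState | count_active_rm` should print 1, and `yarn node -list -all | count_running_nm` should equal the number of lines in yarnhost.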

Common commands#

Stop all ResourceManagers:

lava ssh -f ${HOME}/rmhost -e 'sudo -u yarn yarn --daemon stop resourcemanager'

Stop all NodeManagers:

lava ssh -f ${HOME}/yarnhost -e 'sudo -u yarn yarn --daemon stop nodemanager'

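Register with Skylab (optional)#

The machines to be installed must first be added to Skylab through machine management. If you have not added them yet, see: Register Machines.

On yarn1, edit /usr/local/oushu/lava/conf/server.json and replace localhost with the IP address of the Skylab server. For installing lava, Skylab's base service, see: lava Installation.

Then create the file ~/yarn.json with content like the following: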

{

"data": {

"name": "YARNCluster",

"group_roles": [

{

"role": "yarn.resourcemanager",

"cluster_name": "resourcemanager",

"group_name": "resourcemanager-id",

"machines": [

{

"id": 1,

"name": "ResourceManager",

"subnet": "lava",

"data_ip": "192.168.1.11",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 2,

"name": "ResourceManager2",

"subnet": "lava",

"data_ip": "192.168.1.12",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

}

]

},

{

"role": "yarn.nodemanager",

"cluster_name": "nodemanager",

"group_name": "nodemanager-id",

"machines": [

{

"id": 1,

"name": "nodemanager1",

"subnet": "lava",

"data_ip": "192.168.1.11",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 2,

"name": "nodemanager2",

"subnet": "lava",

"data_ip": "192.168.1.12",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

},{

"id": 3,

"name": "nodemanager3",

"subnet": "lava",

"data_ip": "192.168.1.13",

"manage_ip": "",

"assist_port": 1622,

"ssh_port": 22

}

]

}

]

}

}

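In the configuration above, adjust the entries in each machines array to match your environment. To get the machine information, run the following on the machine where the platform base component lava is installed: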

psql lavaadmin -p 4432 -U oushu -c "select m.id,m.name,s.name as subnet,m.private_ip as data_ip,m.public_ip as manage_ip,m.assist_port,m.ssh_port from machine as m,subnet as s where m.subnet_id=s.id;"

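Use the returned machine information to add each machine to the machines array of the service role it runs.

For example, yarn1 runs the YARN ResourceManager role, so its machine information must be added to the machines array of the yarn.resourcemanager role.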
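To avoid copying query results into the machines arrays by hand, the same query can be run in unaligned mode (`psql -A -t -F '|'`) and each row converted into a JSON machine object. A minimal bash/awk sketch; `rows_to_machines` is hypothetical, it expects the seven columns in the order of the query above, and it does no JSON escaping, so names containing quotes or backslashes would break the output.

```bash
# Hypothetical helper: convert pipe-separated rows
#   id|name|subnet|data_ip|manage_ip|assist_port|ssh_port
# from stdin into a JSON array of machine objects (no string escaping).
rows_to_machines() {
  awk -F'|' '
    BEGIN { printf "[" }
    NF >= 7 {
      if (n++) printf ","
      printf "\n  {\"id\": %d, \"name\": \"%s\", \"subnet\": \"%s\", \"data_ip\": \"%s\", \"manage_ip\": \"%s\", \"assist_port\": %d, \"ssh_port\": %d}", $1, $2, $3, $4, $5, $6, $7
    }
    END { printf "\n]\n" }
  '
}
```

For example, add `-A -t -F '|'` to the psql command above, pipe it into `rows_to_machines`, and paste the entries for each role into the matching machines array in ~/yarn.json.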

Register the cluster with the lava command:

lava login -u oushu -p ********

lava onprem-register service -s YarnMapreduce -f ~/yarn.json

If the output is:

Add service by self success

the registration succeeded. If an error message is returned, resolve the problem it describes.

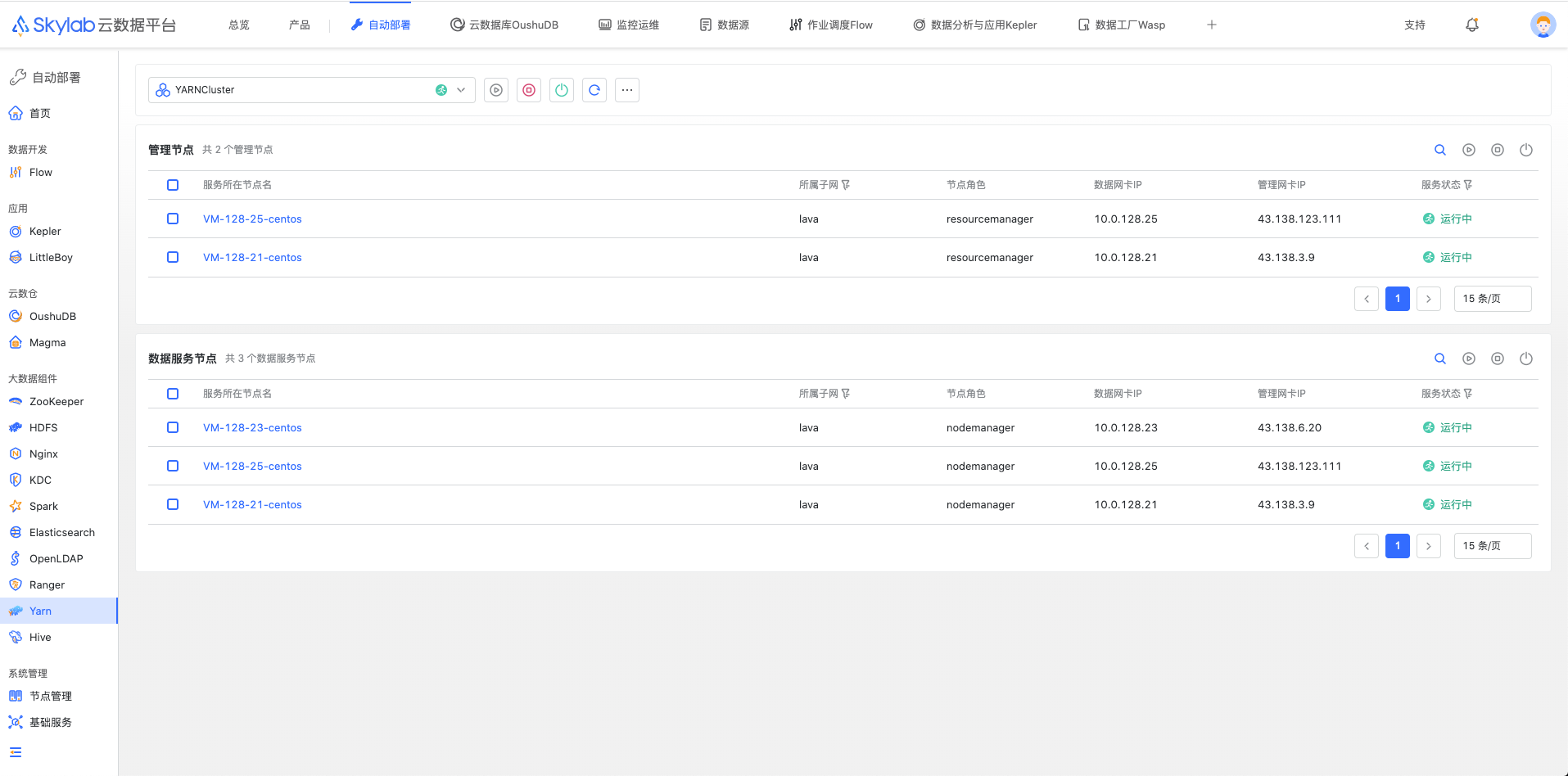

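After logging in to the web UI, you can see the newly added cluster under the corresponding service in the automatic deployment module. The list also monitors the state of the YARN processes on each machine in real time.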

Integrating YARN with Ranger (optional)#

Ranger installation#

If Ranger is enabled, install the Ranger YARN plugin on all YARN nodes:

lava ssh -f ${HOME}/yarnhost -e "yum install -y ranger-yarn-plugin"

lava ssh -f ${HOME}/yarnhost -e 'mkdir -p /usr/local/oushu/yarn/etc'

lava ssh -f ${HOME}/yarnhost -e "ln -s /usr/local/oushu/conf/yarn /usr/local/oushu/yarn/etc/hadoop"

Ranger configuration#

On yarn1, edit the configuration file /usr/local/oushu/ranger-yarn-plugin_2.3.0/install.properties:

POLICY_MGR_URL=http://ranger1:6080

REPOSITORY_NAME=yarndev

COMPONENT_INSTALL_DIR_NAME=/usr/local/oushu/yarn
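When these settings are applied repeatedly or scripted across environments, editing install.properties with sed is less error-prone than an editor session. A minimal bash sketch assuming GNU sed (`-i`) and plain KEY=value lines; `set_prop` is a hypothetical helper, and values containing `|`, `&` or backslashes would need escaping before being passed to sed.

```bash
# Hypothetical helper: set KEY=VALUE in a properties file, replacing an
# existing "KEY=" line or appending one if the key is absent.
set_prop() {
  local file=$1 key=$2 value=$3
  if grep -q "^${key}=" "$file"; then
    # '|' is the sed delimiter because the values contain '/'
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, `set_prop /usr/local/oushu/ranger-yarn-plugin_2.3.0/install.properties POLICY_MGR_URL http://ranger1:6080`, and likewise for REPOSITORY_NAME and COMPONENT_INSTALL_DIR_NAME.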

Synchronize the Ranger configuration for YARN and run the initialization script:

lava scp -r -f ${HOME}/yarnhost /usr/local/oushu/ranger-yarn-plugin_2.3.0/install.properties =:/usr/local/oushu/ranger-yarn-plugin_2.3.0/

lava ssh -f ${HOME}/yarnhost -e '/usr/local/oushu/ranger-yarn-plugin_2.3.0/enable-yarn-plugin.sh'

After the script finishes, the following message indicates success. Restart the service as instructed:

Ranger Plugin for yarn has been enabled. Please restart yarn to ensure that changes are effective.

Restart YARN:

# Restart the YARN cluster

lava ssh -f ${HOME}/yarnhost -e 'sudo -E -u yarn yarn --daemon stop nodemanager'

lava ssh -f ${HOME}/rmhost -e 'sudo -E -u yarn yarn --daemon stop resourcemanager'

lava ssh -f ${HOME}/yarnhost -e 'sudo -E -u yarn yarn --daemon start nodemanager'

lava ssh -f ${HOME}/rmhost -e 'sudo -E -u yarn yarn --daemon start resourcemanager'
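The restart sequence can be wrapped in a function so it is repeatable. A sketch under these assumptions: the host files are in ${HOME} as created earlier, `restart_yarn` is hypothetical, and the LAVA variable (defaulting to lava) exists only so the command sequence can be dry-run with `LAVA=echo restart_yarn`. Unlike the list above, it starts the ResourceManagers before the NodeManagers, matching the order in the Start section, and each step runs only if the previous lava call reported success.

```bash
# Hypothetical helper: restart YARN with lava. Set LAVA=echo to print the
# commands instead of running them.
restart_yarn() {
  local lava=${LAVA:-lava}
  "$lava" ssh -f "${HOME}/yarnhost" -e 'sudo -E -u yarn yarn --daemon stop nodemanager' &&
    "$lava" ssh -f "${HOME}/rmhost" -e 'sudo -E -u yarn yarn --daemon stop resourcemanager' &&
    "$lava" ssh -f "${HOME}/rmhost" -e 'sudo -E -u yarn yarn --daemon start resourcemanager' &&
    "$lava" ssh -f "${HOME}/yarnhost" -e 'sudo -E -u yarn yarn --daemon start nodemanager'
}
```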

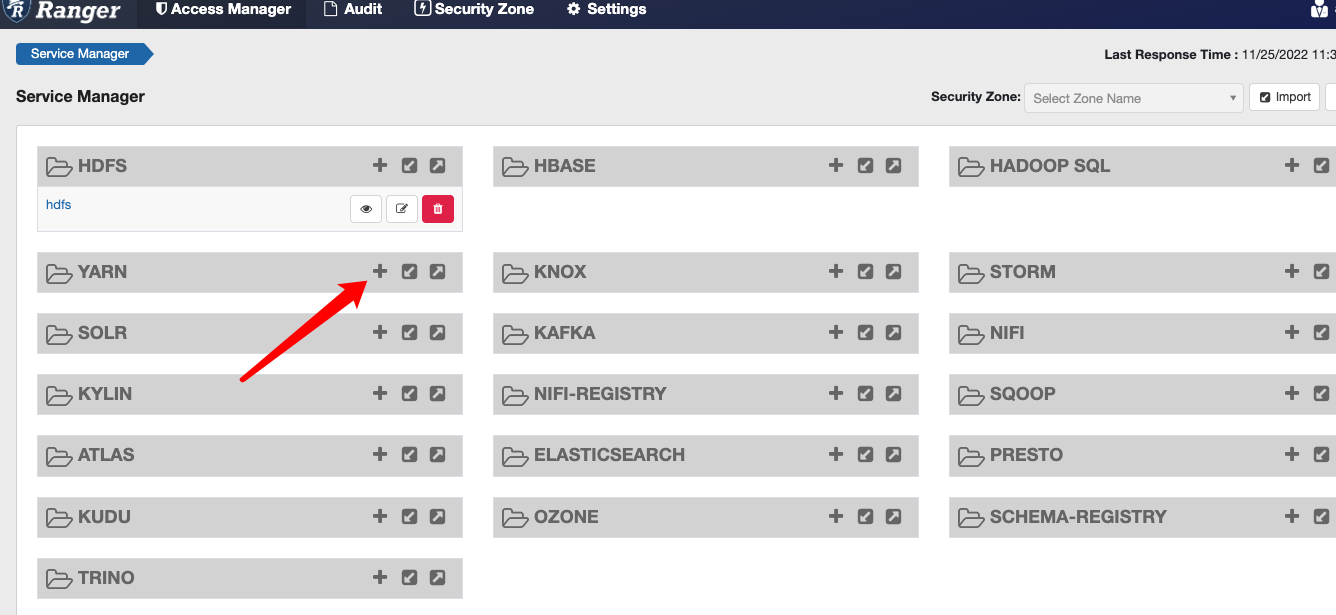

Configure user access policies in the Ranger UI#

Create the YARN service#

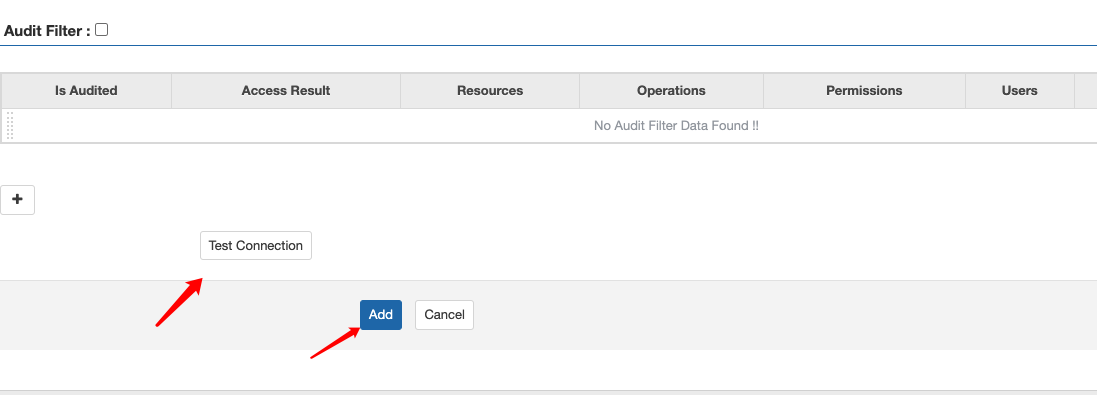

Log in to the Ranger UI at http://192.168.1.14:6080 and click ➕ to add a YARN Service.

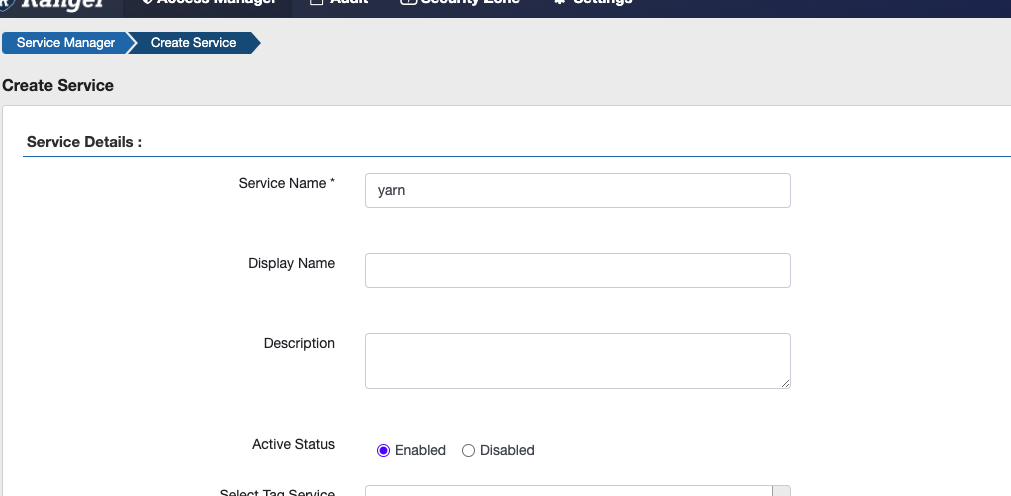

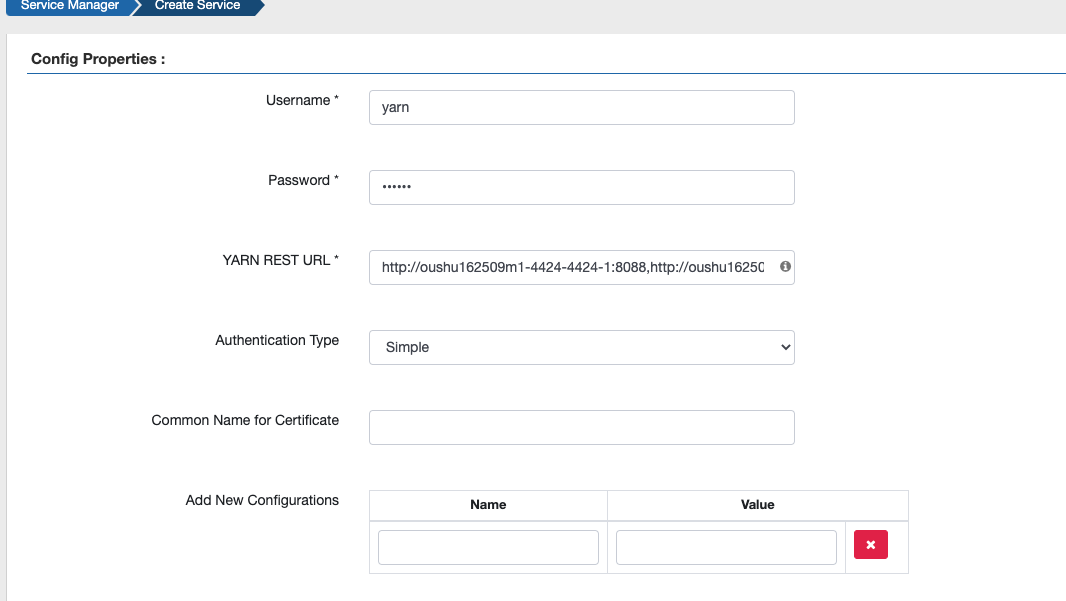

Enter the service name. It must match REPOSITORY_NAME in the install.properties file.

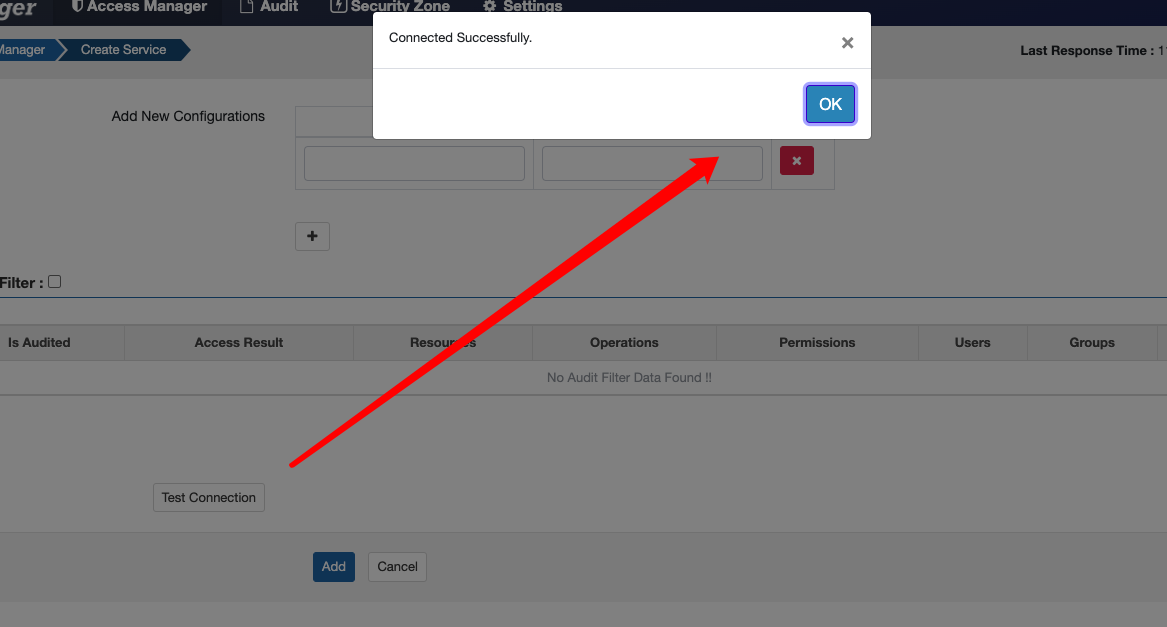

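The username and password are user-defined. If HA is enabled, enter the addresses of all ResourceManagers. If Kerberos authentication is enabled, provide the corresponding keytab file; otherwise, keep the default settings.

Click Test Connection to verify the configuration, then click Add to save it.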

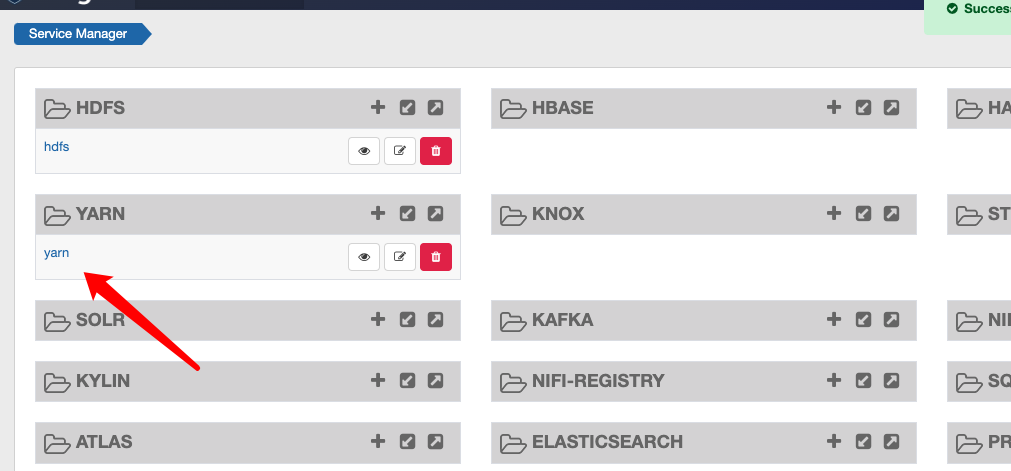

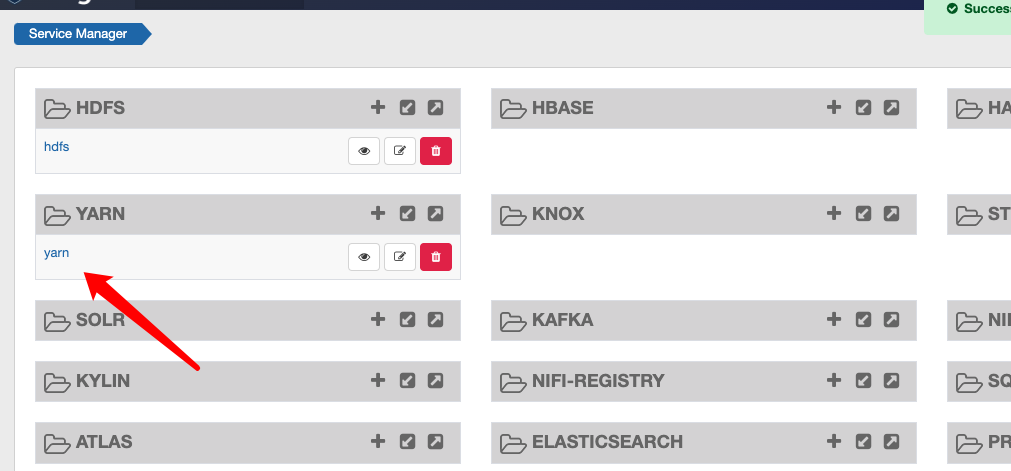

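Return to the home page to see the service you just added.

Create an access policy#

Find the service you just created and click its name.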

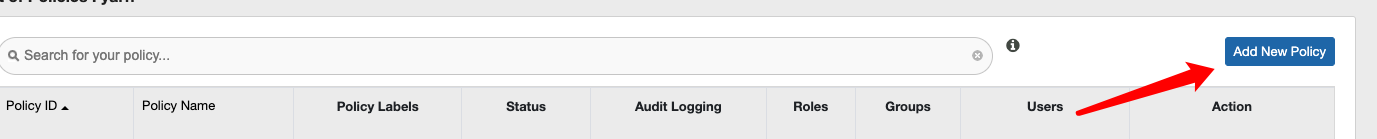

Click the "Add New Policy" button.

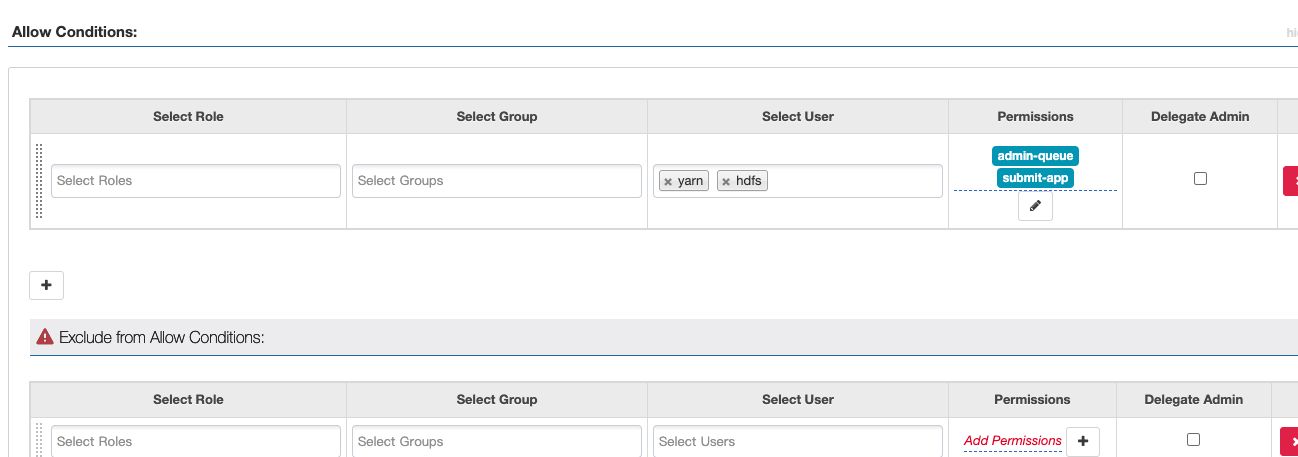

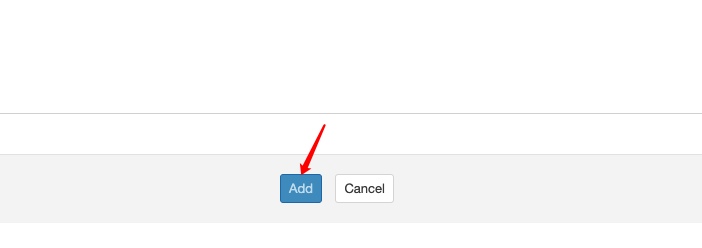

Set the access policy so that the yarn user has permission to submit to the resource queue, and make sure the recursive slider is switched on.

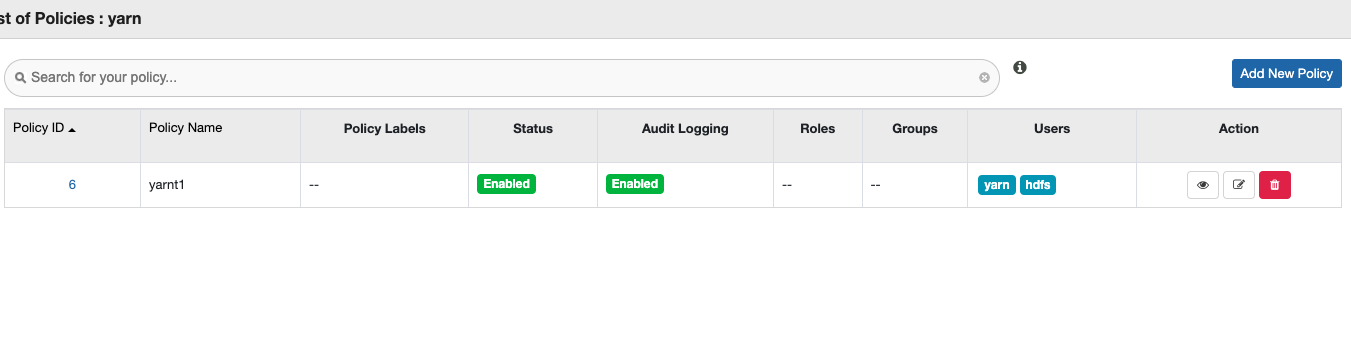

Review the policy you just configured.

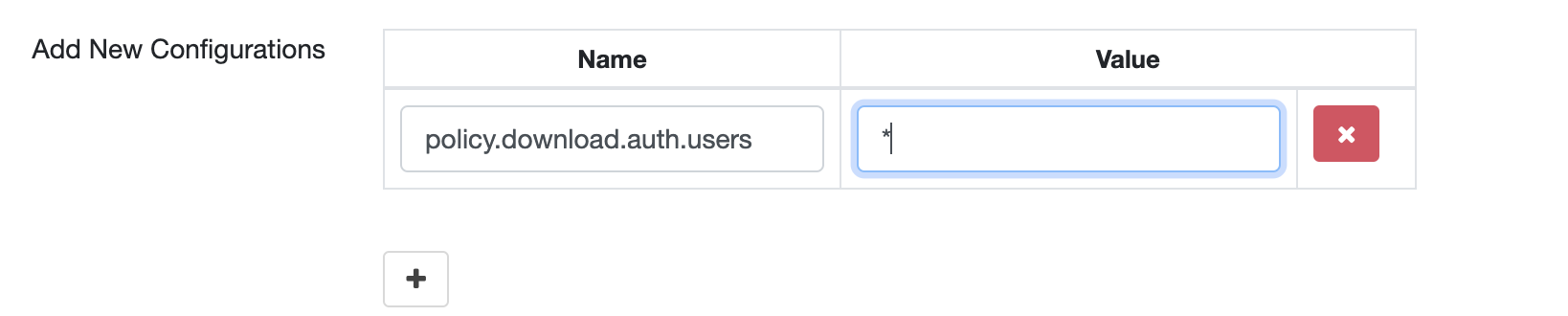

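Notes on Ranger + Kerberos#

When Kerberos is enabled, Kerberos must also be enabled for the Ranger service, and the following parameter must be added when configuring the YARN repo:

The parameter value is the configured Kerberos principal username.

After adding the policy, wait about 30 seconds for it to take effect. The yarn user can then submit, kill, and query jobs in YARN's root.default queue.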