Command-Line Deployment

Prerequisites

First, log in to zookeeper1 and switch to the root user:

ssh zookeeper1

su - root

Before you begin, configure the yum repository and install the lava command-line management tool:

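# Fetch the repo file from the yum repository host (assumed to be 192.168.1.10)

scp root@192.168.1.10:/etc/yum.repos.d/oushu.repo /etc/yum.repos.d/oushu.repo

# Append the yum repository host's entry to /etc/hosts

# Install the lava command-line management tool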

yum clean all

yum makecache

yum install -y lava
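The /etc/hosts step above has no command of its own. A minimal sketch, assuming the repository host is addressed by a hostname that must resolve on this machine (the name yum-repo-host in the usage line is hypothetical): the helper appends the entry only when the hostname is not already mapped, so the step can be rerun safely. The function name ensure_hosts_entry is also hypothetical.

```bash
#!/usr/bin/env bash
# Append "IP HOSTNAME" to a hosts file unless the hostname is already mapped.
# Arguments: $1 = IP, $2 = hostname, $3 = hosts file (defaults to /etc/hosts).
ensure_hosts_entry() {
    local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
    # Look for the hostname as a whole field (after the IP) on non-comment lines.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
        echo "$name already present in $hosts_file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        echo "added $ip $name to $hosts_file"
    fi
}
```

Usage (hypothetical hostname): ensure_hosts_entry 192.168.1.10 yum-repo-host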

Create a file named zkhostfile listing the machines on which ZooKeeper will be installed:

touch ${HOME}/zkhostfile

Add the following hostnames to zkhostfile, one per line:

zookeeper1

zookeeper2

zookeeper3
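Instead of touch followed by manual editing, the host file can be written in one step. A minimal sketch; write_zkhostfile is a hypothetical helper that defaults to the three hostnames used in this guide and overwrites the file, so rerunning it does not duplicate entries.

```bash
#!/usr/bin/env bash
# Write one hostname per line to the host file consumed by "lava ... -f".
# Arguments: $1 = output path; remaining args = hostnames (defaults to this guide's hosts).
write_zkhostfile() {
    local out="$1"
    shift
    if [ "$#" -eq 0 ]; then
        set -- zookeeper1 zookeeper2 zookeeper3   # hostnames assumed in this guide
    fi
    # ">" overwrites, so rerunning never duplicates hosts.
    printf '%s\n' "$@" > "$out"
}
```

Usage: write_zkhostfile "${HOME}/zkhostfile"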

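Exchange SSH keys and distribute the repo file: from the first machine, exchange public keys with the other nodes in the cluster so that SSH logins and configuration distribution work without a password.

# Exchange public keys with the other machines in the cluster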

lava ssh-exkeys -f ${HOME}/zkhostfile -p ********

# Distribute the repo file to the other machines in the cluster

lava scp -f ${HOME}/zkhostfile /etc/yum.repos.d/oushu.repo =:/etc/yum.repos.d

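Kerberos Authentication Dependency (Optional)

If Kerberos authentication is enabled, see Kerberos Installation for how to install and deploy Kerberos.

The Kerberos service (KDC) is assumed to run on kdc1.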

Preparation

Check the hosts settings on each machine:

cat /etc/hosts

127.0.0.1 localhost.localdomain localhost

127.0.0.1 localhost4.localdomain4 localhost4

10.0.128.13 zookeeper1

10.0.128.5 zookeeper2

10.0.128.8 zookeeper3

If the hosts file contains a line like the following, delete it:

127.0.0.1 hostname hostname
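The check above can be scripted. A minimal sketch: it rewrites the hosts file without the 127.0.0.1 lines that include the given hostname, and keeps a .bak copy of the original. The function name remove_loopback_hostname is hypothetical; review the result on one machine before running it on every node.

```bash
#!/usr/bin/env bash
# Remove "127.0.0.1 ... <hostname> ..." lines from a hosts file, keeping a .bak copy.
# Arguments: $1 = hostname (defaults to `hostname`), $2 = hosts file (defaults to /etc/hosts).
remove_loopback_hostname() {
    local name="${1:-$(hostname)}" hosts_file="${2:-/etc/hosts}"
    cp "$hosts_file" "${hosts_file}.bak"
    # Skip 127.0.0.1 entries whose name fields include $name; print every other line.
    awk -v n="$name" '
        $1 == "127.0.0.1" { for (i = 2; i <= NF; i++) if ($i == n) next }
        { print }
    ' "${hosts_file}.bak" > "$hosts_file"
}
```

Usage on a node: remove_loopback_hostname "$(hostname)"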

Kerberos Preparation (Optional)

If Kerberos is enabled, install the Kerberos client on all ZooKeeper nodes:

lava ssh -f ${HOME}/zkhostfile -e "yum install -y krb5-libs krb5-workstation"

Log in to kdc1 and create the keytab directory:

ssh kdc1

mkdir -p /etc/security/keytabs

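Create the ZooKeeper-related principals and generate zookeeper.keytab.

Note: the hostname in each principal must be written in lowercase, regardless of the case of the actual hostname.

Start the KDC admin shell:

kadmin.local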

addprinc -randkey zookeeper/zookeeper1@OUSHU.COM

addprinc -randkey zookeeper/zookeeper2@OUSHU.COM

addprinc -randkey zookeeper/zookeeper3@OUSHU.COM

addprinc -randkey HTTP/zookeeper1@OUSHU.COM

addprinc -randkey HTTP/zookeeper2@OUSHU.COM

addprinc -randkey HTTP/zookeeper3@OUSHU.COM

ktadd -k /etc/security/keytabs/zookeeper.keytab zookeeper/zookeeper1@OUSHU.COM

ktadd -k /etc/security/keytabs/zookeeper.keytab zookeeper/zookeeper2@OUSHU.COM

ktadd -k /etc/security/keytabs/zookeeper.keytab zookeeper/zookeeper3@OUSHU.COM

ktadd -norandkey -k /etc/security/keytabs/zookeeper.keytab HTTP/zookeeper1@OUSHU.COM

ktadd -norandkey -k /etc/security/keytabs/zookeeper.keytab HTTP/zookeeper2@OUSHU.COM

ktadd -norandkey -k /etc/security/keytabs/zookeeper.keytab HTTP/zookeeper3@OUSHU.COM
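Since the principal list grows with the number of nodes, the statements above can be generated from zkhostfile. A minimal sketch: it prints the same addprinc/ktadd statements per host, lowercasing each hostname as the note requires. The realm OUSHU.COM and the keytab path are taken from this guide; gen_zk_kadmin_cmds is a hypothetical helper, and it assumes a copy of zkhostfile is available on kdc1.

```bash
#!/usr/bin/env bash
# Print kadmin statements that create and export the zookeeper/ and HTTP/ principals.
# Arguments: $1 = host file, $2 = realm, $3 = keytab path.
gen_zk_kadmin_cmds() {
    local hosts_file="$1" realm="${2:-OUSHU.COM}" keytab="${3:-/etc/security/keytabs/zookeeper.keytab}"
    local host
    # "|| [ -n ... ]" also handles a last line without a trailing newline.
    while read -r host || [ -n "$host" ]; do
        [ -z "$host" ] && continue
        # Principals must use lowercase hostnames.
        host=$(printf '%s' "$host" | tr '[:upper:]' '[:lower:]')
        echo "addprinc -randkey zookeeper/${host}@${realm}"
        echo "addprinc -randkey HTTP/${host}@${realm}"
        echo "ktadd -k ${keytab} zookeeper/${host}@${realm}"
        echo "ktadd -norandkey -k ${keytab} HTTP/${host}@${realm}"
    done < "$hosts_file"
}
```

Each printed statement can then be run non-interactively on kdc1, for example: gen_zk_kadmin_cmds ./zkhostfile | while read -r stmt; do kadmin.local -q "$stmt" < /dev/null; done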

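Distribute the generated keytab. The final chown requires the zookeeper user; if that user does not exist yet (it is expected to be created by the ZooKeeper package), rerun the chown after the installation step below.

ssh zookeeper1

lava ssh -f ${HOME}/zkhostfile -e 'mkdir -p /etc/security/keytabs/'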

scp root@kdc1:/etc/krb5.conf /etc/krb5.conf

scp root@kdc1:/etc/security/keytabs/zookeeper.keytab /etc/security/keytabs/zookeeper.keytab

lava scp -r -f ${HOME}/zkhostfile /etc/krb5.conf =:/etc/krb5.conf

lava scp -r -f ${HOME}/zkhostfile /etc/security/keytabs/zookeeper.keytab =:/etc/security/keytabs/zookeeper.keytab

lava ssh -f ${HOME}/zkhostfile -e 'chown zookeeper:hadoop /etc/security/keytabs/zookeeper.keytab'
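To confirm that each node received a keytab with the expected principals, klist -kt /etc/security/keytabs/zookeeper.keytab lists its entries. A minimal sketch of a checker for that listing, assuming the principal is the last field of each entry line (the case for plain klist -kt output without -e); check_keytab_principals is a hypothetical name.

```bash
#!/usr/bin/env bash
# Check that every expected principal appears in `klist -kt` output read from stdin.
# Arguments: principals to look for. Prints missing ones; returns 1 if any are missing.
check_keytab_principals() {
    local listing p missing=0
    listing=$(cat)
    for p in "$@"; do
        # Compare against the last field of each line, where klist -kt prints the principal.
        if ! printf '%s\n' "$listing" | awk -v p="$p" '$NF == p { found = 1 } END { exit !found }'; then
            echo "missing: $p"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage on zookeeper1: klist -kt /etc/security/keytabs/zookeeper.keytab | check_keytab_principals zookeeper/zookeeper1@OUSHU.COM HTTP/zookeeper1@OUSHU.COM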

Installation

Install ZooKeeper with yum:

lava ssh -f ${HOME}/zkhostfile -e 'yum install -y zookeeper'

Create the ZooKeeper data directory:

lava ssh -f ${HOME}/zkhostfile -e 'mkdir -p /data1/zookeeper/data'

lava ssh -f ${HOME}/zkhostfile -e 'chown -R zookeeper:zookeeper /data1/zookeeper'

On each of the three ZooKeeper nodes, create a myid file in the data directory whose content is 1 on zookeeper1, 2 on zookeeper2, and 3 on zookeeper3. Then give the zookeeper user ownership of the data directory:

echo 1 > myid

lava scp -h zookeeper1 ./myid =:/data1/zookeeper/data

echo 2 > myid

lava scp -h zookeeper2 ./myid =:/data1/zookeeper/data

echo 3 > myid

lava scp -h zookeeper3 ./myid =:/data1/zookeeper/data

lava ssh -f ${HOME}/zkhostfile -e "chown -R zookeeper:zookeeper /data1/zookeeper/data"
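The per-node myid values can be derived from the order of hosts in zkhostfile, so that the Nth non-empty line gets id N. A minimal sketch that writes one myid file per host into a local staging directory; the function name stage_myids and the staging layout are hypothetical. The ids must match the server.N entries in zoo.cfg.

```bash
#!/usr/bin/env bash
# For each host in the host file, write <staging>/<host>/myid containing its 1-based position.
# Arguments: $1 = host file, $2 = staging directory.
stage_myids() {
    local hosts_file="$1" staging="$2" host id=0
    while read -r host || [ -n "$host" ]; do
        [ -z "$host" ] && continue          # blank lines do not consume an id
        id=$((id + 1))
        mkdir -p "${staging}/${host}"
        echo "$id" > "${staging}/${host}/myid"
        echo "${host} -> ${id}"
    done < "$hosts_file"
}
```

Each staged file can then be copied to /data1/zookeeper/data on its own host with lava scp (assuming it accepts -h <host>), before running the chown above.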

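Configuration

Edit zoo.cfg under /usr/local/oushu/conf/zookeeper/ and add the cluster information. dataDir must point to the directory that holds the myid file created above:

dataDir=/data1/zookeeper/data

clientPort=2181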

server.1=zookeeper1:2888:3888

server.2=zookeeper2:2888:3888

server.3=zookeeper3:2888:3888
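Each server.N line must use the same N as the myid on that host. A minimal sketch that prints the lines from zkhostfile, numbering non-empty lines from 1, which matches the myid values 1, 2, 3 assigned above in the same host order. 2888 and 3888 are the quorum and leader-election ports used above; gen_server_lines is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Print zoo.cfg "server.N=host:quorumPort:electionPort" lines in host-file order.
# Arguments: $1 = host file, $2 = quorum port (default 2888), $3 = election port (default 3888).
gen_server_lines() {
    local hosts_file="$1" peer="${2:-2888}" election="${3:-3888}" host id=0
    while read -r host || [ -n "$host" ]; do
        [ -z "$host" ] && continue
        id=$((id + 1))
        echo "server.${id}=${host}:${peer}:${election}"
    done < "$hosts_file"
}
```

Review the output before appending it, e.g. gen_server_lines ${HOME}/zkhostfile >> /usr/local/oushu/conf/zookeeper/zoo.cfg, since repeating the append would duplicate entries.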

Kerberos Configuration (Optional)

Edit zoo.cfg under /usr/local/oushu/conf/zookeeper/ and add the following:

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

jaasLoginRenew=3600000

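Then create server-jaas.conf and client-jaas.conf in /usr/local/oushu/conf/zookeeper/ with the following contents. The examples use zookeeper1; in server-jaas.conf, each node's principal should name that node's own lowercase hostname.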

vim /usr/local/oushu/conf/zookeeper/server-jaas.conf

Server {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="/etc/security/keytabs/zookeeper.keytab"

storeKey=true

useTicketCache=false

principal="zookeeper/zookeeper1@OUSHU.COM";

};

vim /usr/local/oushu/conf/zookeeper/client-jaas.conf

Client {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

keyTab="/etc/security/keytabs/zookeeper.keytab"

storeKey=true

useTicketCache=false

principal="zookeeper/zookeeper1@OUSHU.COM";

};
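By default a ZooKeeper client requests a Kerberos ticket for zookeeper/<server hostname>, so each server should log in with its own principal. Because the startup step syncs the same configuration directory to every node, one option is to rewrite the Server principal on each node after syncing and before starting. A minimal sketch, assuming the file contains a single zookeeper/<host>@<realm> principal as in the example; set_jaas_principal_host is hypothetical and leaves a .bak copy.

```bash
#!/usr/bin/env bash
# Rewrite the host part of principal="zookeeper/<host>@REALM" in a JAAS file.
# Arguments: $1 = JAAS file, $2 = hostname (lowercased before use).
set_jaas_principal_host() {
    local jaas_file="$1" host
    host=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
    # Replace only the text between "zookeeper/" and "@", keeping the realm.
    # -i.bak works with both GNU and BSD sed.
    sed -i.bak "s|principal=\"zookeeper/[^@\"]*@|principal=\"zookeeper/${host}@|" "$jaas_file"
}
```

Usage on each node: set_jaas_principal_host /usr/local/oushu/conf/zookeeper/server-jaas.conf "$(hostname -s)"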

Add the following line to /usr/local/oushu/conf/zookeeper/java.env so that the server loads server-jaas.conf:

export JVMFLAGS="-Djava.security.auth.login.config=/usr/local/oushu/conf/zookeeper/server-jaas.conf"

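When the ZooKeeper client is deployed on its own, configure it as follows. The same applies to other components that act as ZooKeeper clients.

For example, when Kerberos is enabled and Hive connects to ZooKeeper, add the following line to hive-env.sh and then restart Hive: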

export CLIENT_JVMFLAGS="-Djava.security.auth.login.config=/usr/local/oushu/conf/zookeeper/client-jaas.conf"

Startup

Sync the configuration files, then start ZooKeeper:

lava scp -r -f ${HOME}/zkhostfile /usr/local/oushu/conf/zookeeper/* =:/usr/local/oushu/conf/zookeeper/

lava ssh -f ${HOME}/zkhostfile -e 'sudo -u zookeeper /usr/local/oushu/zookeeper/bin/zkServer.sh start'

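Verification

Check the status of all ZooKeeper nodes. In a healthy ensemble, one node reports leader and the others report follower: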

lava ssh -f ${HOME}/zkhostfile -e 'sudo -u zookeeper /usr/local/oushu/zookeeper/bin/zkServer.sh status'

ZooKeeper JMX enabled by default

Using config: /usr/local/oushu/zookeeper/../conf/zookeeper/zoo.cfg

Client port found: 2181. Client address: zookeeper1.

Mode: leader

ZooKeeper JMX enabled by default

Using config: /usr/local/oushu/zookeeper/../conf/zookeeper/zoo.cfg

Client port found: 2181. Client address: zookeeper2.

Mode: follower

ZooKeeper JMX enabled by default

Using config: /usr/local/oushu/zookeeper/../conf/zookeeper/zoo.cfg

Client port found: 2181. Client address: zookeeper3.

Mode: follower
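The status check can be automated. A minimal sketch that reads the combined status output on stdin and succeeds only when exactly one node is leader and every other node is follower; it accepts both "Mode: leader" and "Mode:leader", and an optional argument gives the expected node count. check_zk_modes is a hypothetical name.

```bash
#!/usr/bin/env bash
# Summarize zkServer.sh status output (stdin); succeed if there is exactly one leader,
# all other nodes are followers, and (optionally) the node count equals $1.
check_zk_modes() {
    local expected="${1:-}" output leaders followers total
    output=$(cat)
    # "|| true" keeps the count ("0") when grep finds nothing, even under set -e.
    leaders=$(printf '%s\n' "$output" | grep -c 'Mode: *leader' || true)
    followers=$(printf '%s\n' "$output" | grep -c 'Mode: *follower' || true)
    total=$(printf '%s\n' "$output" | grep -c 'Mode:' || true)
    echo "leaders=${leaders} followers=${followers} total=${total}"
    [ "$leaders" -eq 1 ] && [ $((leaders + followers)) -eq "$total" ] &&
        { [ -z "$expected" ] || [ "$total" -eq "$expected" ]; }
}
```

Usage: lava ssh -f ${HOME}/zkhostfile -e 'sudo -u zookeeper /usr/local/oushu/zookeeper/bin/zkServer.sh status' 2>&1 | check_zk_modes 3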

Common Commands

Stop ZooKeeper:

lava ssh -f ${HOME}/zkhostfile -e 'sudo -u zookeeper /usr/local/oushu/zookeeper/bin/zkServer.sh stop'

Open the ZooKeeper client shell:

sudo -u zookeeper /usr/local/oushu/zookeeper/bin/zkCli.sh

[zk: 127.0.0.1:2181(CONNECTED) 0]
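As the prompt shows, zkCli.sh without options connects to 127.0.0.1:2181. To connect through the ensemble instead, pass a connect string with -server. A minimal sketch that builds it from zkhostfile, assuming every node uses clientPort 2181; zk_connect_string is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Build a ZooKeeper connect string ("host1:port,host2:port,...") from a host file.
# Arguments: $1 = host file, $2 = client port (default 2181, the clientPort in zoo.cfg).
zk_connect_string() {
    local hosts_file="$1" port="${2:-2181}" host result=""
    while read -r host || [ -n "$host" ]; do
        [ -z "$host" ] && continue
        # Prefix a comma only when something has already been added.
        result="${result:+${result},}${host}:${port}"
    done < "$hosts_file"
    printf '%s\n' "$result"
}
```

Usage: sudo -u zookeeper /usr/local/oushu/zookeeper/bin/zkCli.sh -server "$(zk_connect_string ${HOME}/zkhostfile)"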

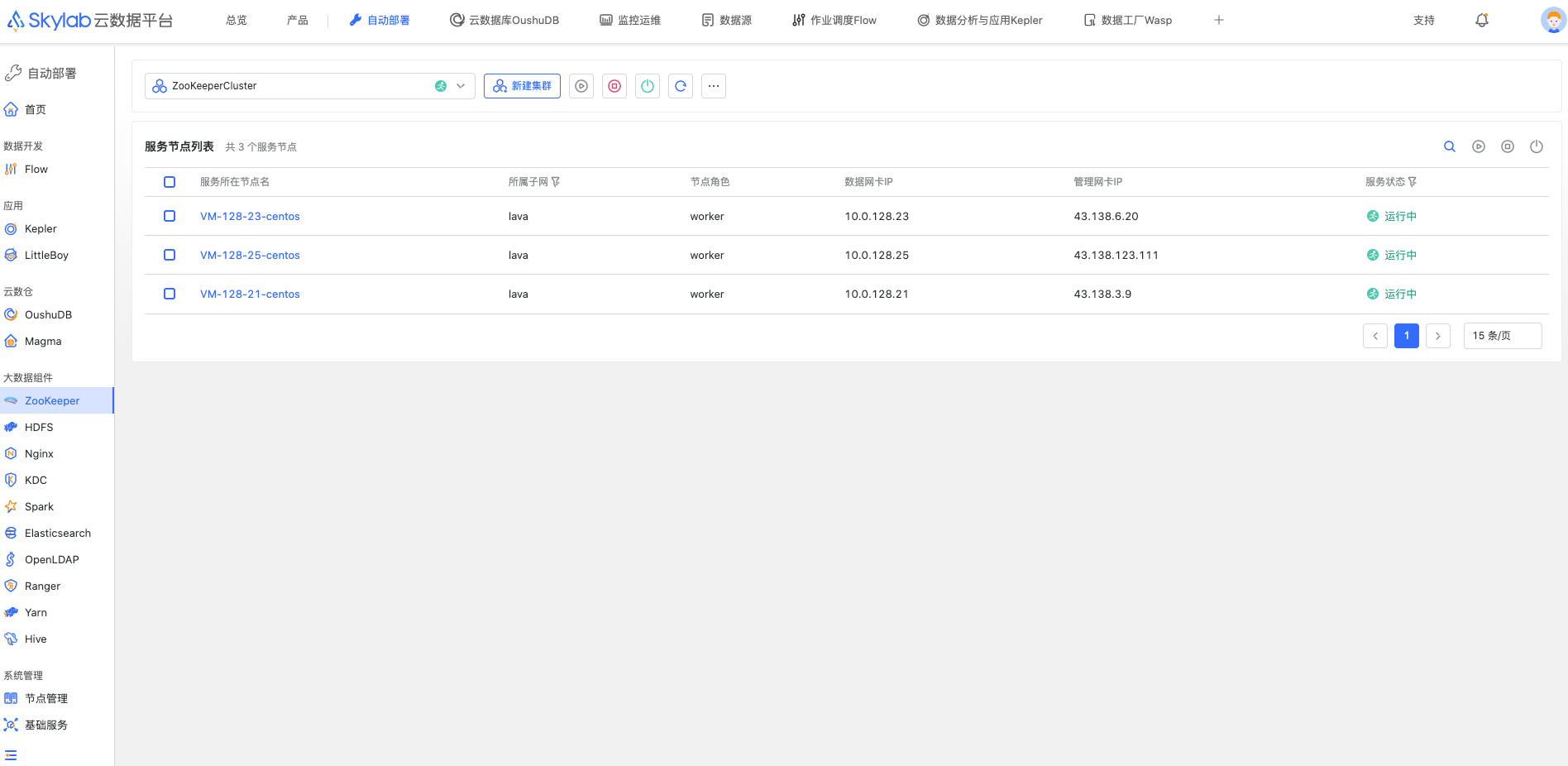

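Register with Skylab (Optional)

The machines on which ZooKeeper will be installed must be added to Skylab through machine management. If you have not added them yet, see Register Machines.

On zookeeper1, edit server.json in /usr/local/oushu/lava/conf and replace localhost with the IP address of the Skylab server. For how to install lava, Skylab's base service, see lava Installation.

Then create the file ~/zookeeper.json with content like the following: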

{
    "data": {
        "name": "ZooKeeperCluster",
        "group_roles": [
            {
                "role": "zk.worker",
                "cluster_name": "ZooKeeper-id",
                "group_name": "ZooKeeper",
                "machines": [
                    {
                        "id": 1,
                        "name": "zookeeper1",
                        "subnet": "lava",
                        "data_ip": "192.168.1.11",
                        "manage_ip": "",
                        "assist_port": 1622,
                        "ssh_port": 22
                    },
                    {
                        "id": 2,
                        "name": "zookeeper2",
                        "subnet": "lava",
                        "data_ip": "192.168.1.12",
                        "manage_ip": "",
                        "assist_port": 1622,
                        "ssh_port": 22
                    },
                    {
                        "id": 3,
                        "name": "zookeeper3",
                        "subnet": "lava",
                        "data_ip": "192.168.1.13",
                        "manage_ip": "",
                        "assist_port": 1622,
                        "ssh_port": 22
                    }
                ]
            }
        ]
    }
}

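In the file above, adjust the entries in the machines array to match your environment. To obtain the machine information, run the following on the machine where the platform base component lava is installed: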

psql lavaadmin -p 4432 -U oushu -c "select m.id,m.name,s.name as subnet,m.private_ip as data_ip,m.public_ip as manage_ip,m.assist_port,m.ssh_port from machine as m,subnet as s where m.subnet_id=s.id;"

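Using the machine information returned, add each machine to the machines array of the role it serves.

For example, zookeeper1 hosts the ZooKeeper Worker role, so its machine information must be added to the machines array of the zk.worker role.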
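The query rows can be turned into machines entries mechanically. A minimal sketch, assuming the query is rerun with psql's unaligned, tuples-only, comma-separated output (-A -t -F ','), so each row reads id,name,subnet,data_ip,manage_ip,assist_port,ssh_port, and that no value contains a comma or quote. rows_to_machines_json is a hypothetical helper; choosing which machines belong to which role is still up to you.

```bash
#!/usr/bin/env bash
# Convert comma-separated rows on stdin
# (id,name,subnet,data_ip,manage_ip,assist_port,ssh_port) into a JSON array
# suitable for the "machines" field. Rows without exactly 7 fields are ignored.
rows_to_machines_json() {
    awk -F',' '
        BEGIN { printf "[\n" }
        NF == 7 {
            if (n++) printf ",\n"
            printf "{\"id\": %s, \"name\": \"%s\", \"subnet\": \"%s\", \"data_ip\": \"%s\", \"manage_ip\": \"%s\", \"assist_port\": %s, \"ssh_port\": %s}", $1, $2, $3, $4, $5, $6, $7
        }
        END { if (n) printf "\n"; printf "]\n" }
    '
}
```

Usage: psql lavaadmin -p 4432 -U oushu -A -t -F ',' -c "<the query above>" | rows_to_machines_json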

Register the cluster with lava:

lava login -u oushu -p ********

lava onprem-register service -s ZooKeeper -f ~/zookeeper.json

If the command returns:

Add service by self success

the registration succeeded. If an error message is returned, resolve the problem according to the message.

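After logging in to the web UI, you can see the newly added cluster under the corresponding service in the automatic deployment module. The list also monitors the state of the ZooKeeper process on each machine in real time.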